mirror of

https://github.com/humanlayer/12-factor-agents.git

synced 2025-08-20 18:59:53 +03:00

422 lines

21 KiB

YAML

title: "Building the 12-factor agent template from scratch in Python"


text: "Steps to start from a bare Python repo and build up a 12-factor agent. This walkthrough will guide you through creating a Python agent that follows the 12-factor methodology with BAML."


targets:


- ipynb: "./build/workshop-2025-07-16.ipynb"


sections:


- name: hello-world


title: "Chapter 0 - Hello World"


text: "Let's start with a basic Python setup and a hello world program."


steps:


- text: |


This guide will walk you through building agents in Python with BAML.


We'll start simple with a hello world program and gradually build up to a full agent.


For this notebook, you'll need to have your OpenAI API key saved in Google Colab secrets.


## Where We're Headed


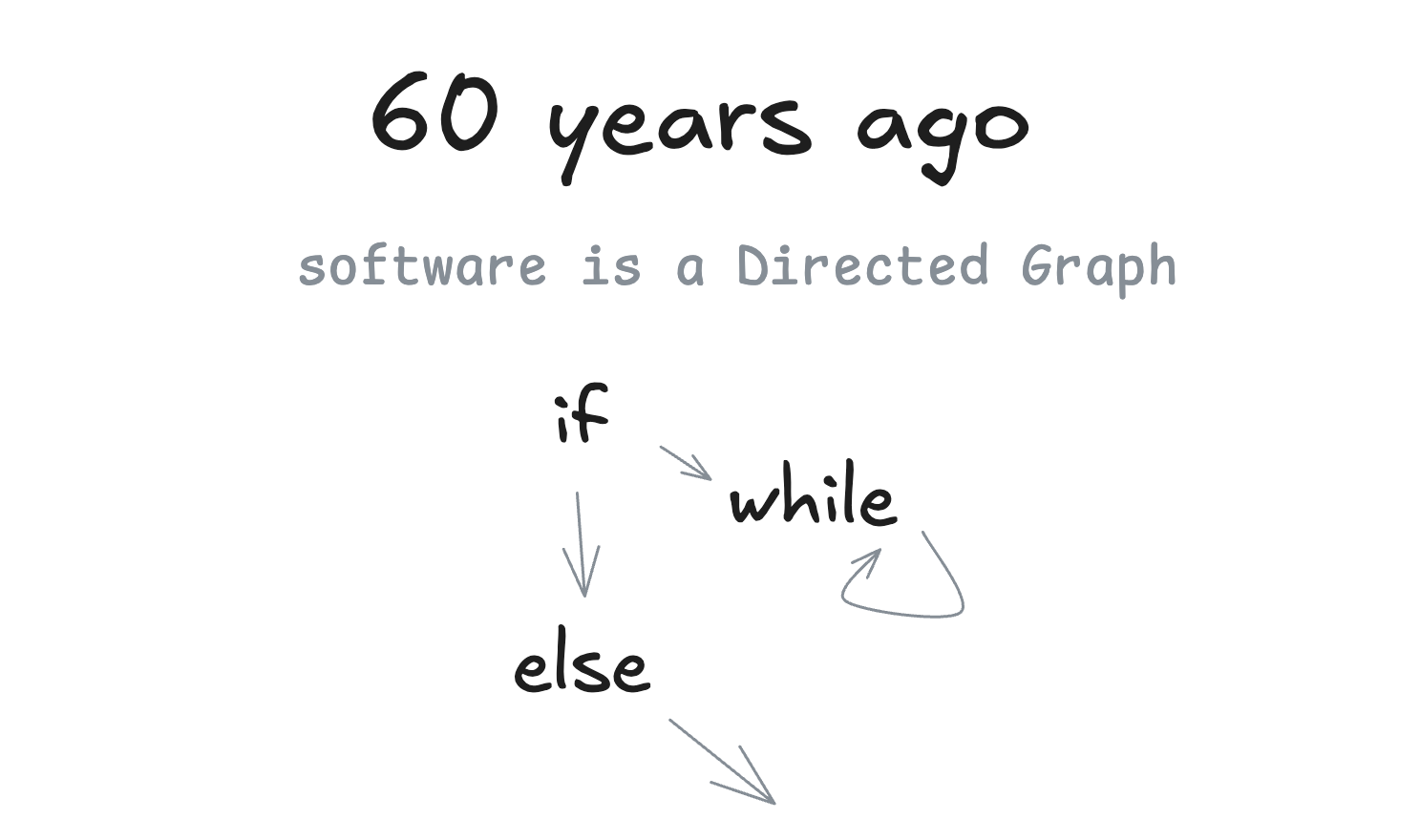

Before we dive in, let's understand the journey ahead. We're building toward **micro-agents in deterministic DAGs** - a powerful pattern that combines the flexibility of AI with the reliability of traditional software.


📖 **Learn more**: [A Brief History of Software](https://github.com/humanlayer/12-factor-agents/blob/main/content/brief-history-of-software.md)


- text: "Here's our simple hello world program:"


- file: {src: ./walkthrough/00-main.py}


- text: "Let's run it to verify it works:"


- run_main: {regenerate_baml: false}


- name: cli-and-agent


title: "Chapter 1 - CLI and Agent Loop"


text: "Now let's add BAML and create our first agent with a CLI interface."


steps:


- text: |


In this chapter, we'll integrate BAML to create an AI agent that can respond to user input.


## What is BAML?


BAML (Boundary Markup Language) is a domain-specific language designed to help developers build reliable AI workflows and agents. Created by [BoundaryML](https://www.boundaryml.com/) (a Y Combinator W23 company), BAML adds the engineering to prompt engineering.


### Why BAML?


- **Type-safe outputs**: Get fully type-safe outputs from LLMs, even when streaming


- **Language agnostic**: Works with Python, TypeScript, Ruby, Go, and more


- **LLM agnostic**: Works with any LLM provider (OpenAI, Anthropic, etc.)


- **Better performance**: BoundaryML reports that BAML's structured-output parsing outperforms even OpenAI's native function calling


- **Developer-friendly**: Native VS Code extension with syntax highlighting, autocomplete, and interactive playground


### Learn More


- 📚 [Official Documentation](https://docs.boundaryml.com/home)


- 💻 [GitHub Repository](https://github.com/BoundaryML/baml)


- 🎯 [What is BAML?](https://docs.boundaryml.com/guide/introduction/what-is-baml)


- 📖 [BAML Examples](https://github.com/BoundaryML/baml-examples)


- 🏢 [Company Website](https://www.boundaryml.com/)


- 📰 [Blog: AI Agents Need a New Syntax](https://www.boundaryml.com/blog/ai-agents-need-new-syntax)


BAML turns prompt engineering into schema engineering, where you focus on defining the structure of your data rather than wrestling with prompts. This approach leads to more reliable and maintainable AI applications.


### Note on Developer Experience


BAML is much easier to work with in VS Code using BoundaryML's official extension, which provides syntax highlighting, autocomplete, inline testing, and an interactive playground. In this notebook tutorial, however, we'll edit BAML files directly, without those IDE features.


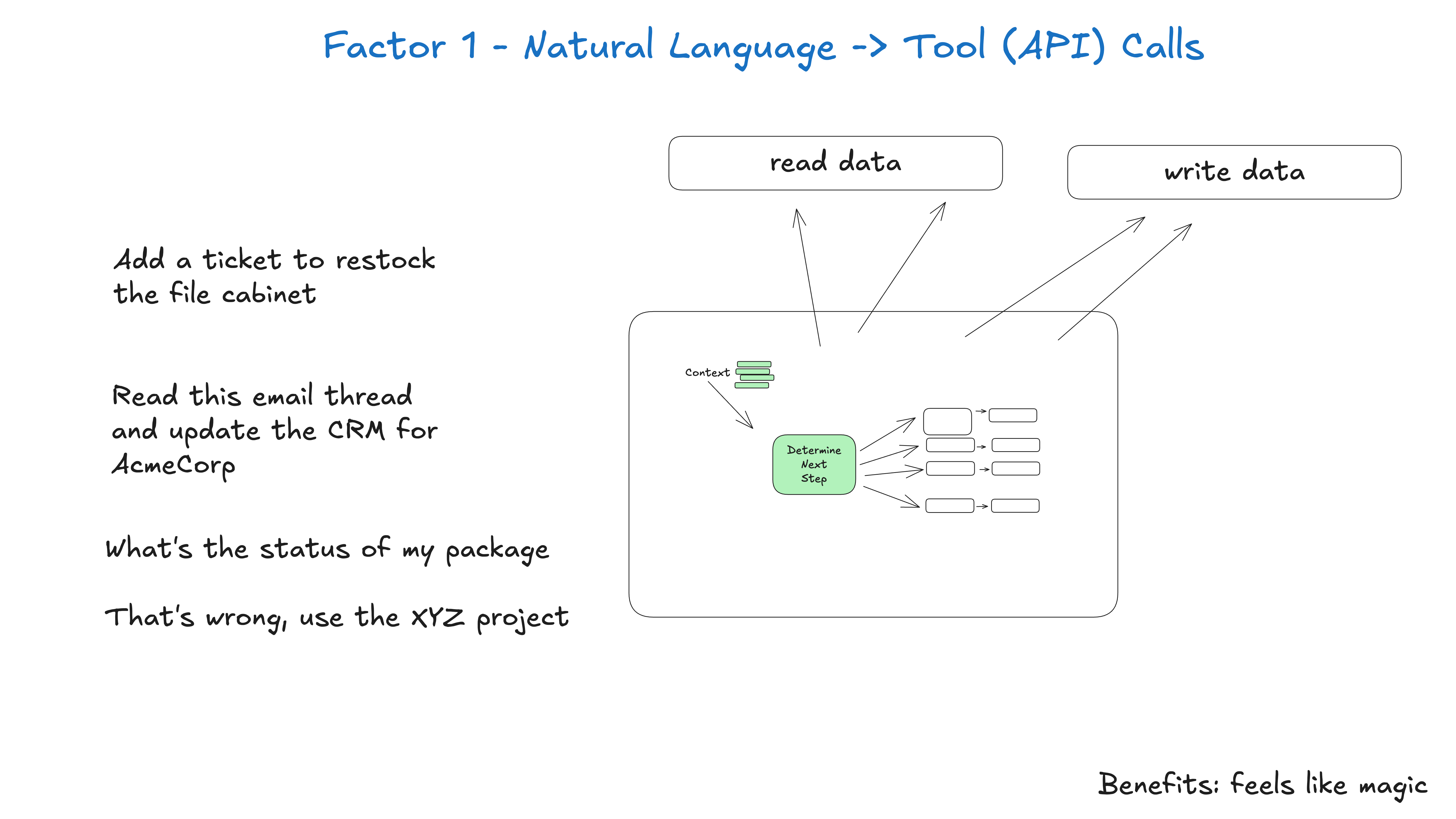

## Factor 1: Natural Language to Tool Calls


What we're building implements the first factor of 12-factor agents - converting natural language into structured tool calls.


📖 **Learn more**: [Factor 1: Natural Language to Tool Calls](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-01-natural-language-to-tool-calls.md)


First, let's set up BAML support in our notebook.


- baml_setup: true


- text: |


Now let's create our agent that will use BAML to process user input.


First, we'll define the core agent logic:


- file: {src: ./walkthrough/01-agent.py}


- text: |


Next, we need to define the BAML function that our agent will use.


### Understanding BAML Syntax


BAML files define:


- **Classes**: Structured output schemas (like `DoneForNow` below)


- **Functions**: AI-powered functions that take inputs and return structured outputs


- **Tests**: Example inputs/outputs to validate your prompts


This BAML file defines what our agent can do:


- fetch_file: {src: ./walkthrough/01-agent.baml, dest: baml_src/agent.baml}


- text: |


Now let's create our main function that accepts a message parameter:


- file: {src: ./walkthrough/01-main.py}


- text: |


Let's test our agent! Try calling `main()` with different messages:


- `main("What's the weather like?")`


- `main("Tell me a joke")`


- `main("How are you doing today?")`


- run_main: {regenerate_baml: true, args: "Hello from the Python notebook!"}


- name: calculator-tools


title: "Chapter 2 - Add Calculator Tools"


text: "Let's add some calculator tools to our agent."


steps:


- text: |


Let's start by adding a tool definition for the calculator.


These are simple structured outputs that we'll ask the model to return as a "next step" in the agentic loop.


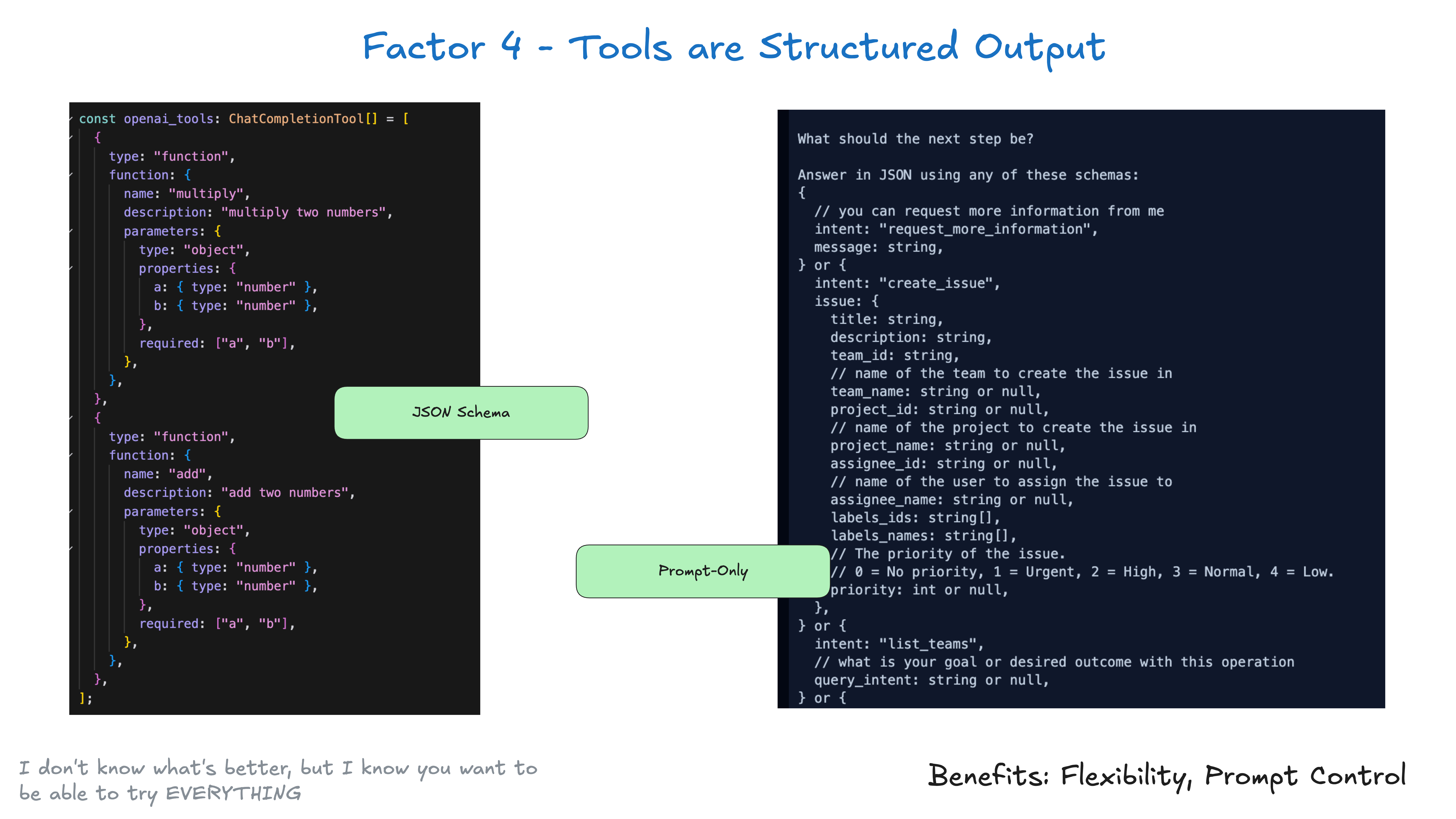

## Factor 4: Tools Are Structured Outputs


This chapter demonstrates that tools are just structured JSON outputs from the LLM - nothing more complex!


📖 **Learn more**: [Factor 4: Tools Are Structured Outputs](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-04-tools-are-structured-outputs.md)
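
To make this concrete, here's a minimal sketch in plain Python, separate from the workshop files. The `AddTool` class, its `intent`/`a`/`b` fields, and the sample JSON string are assumptions for illustration; the real schema is whatever `tool_calculator.baml` defines. The point is only that a "tool call" is JSON we parse, and our own code decides what to do with it.

```python
import json
from dataclasses import dataclass


@dataclass
class AddTool:
    """Hypothetical schema for an 'add' tool call (not the workshop's generated type)."""
    intent: str
    a: float
    b: float


def parse_tool_call(raw: str) -> AddTool:
    """Parse the model's JSON output into a typed object, rejecting unknown intents."""
    data = json.loads(raw)
    if data.get("intent") != "add":
        raise ValueError(f"unsupported intent: {data.get('intent')!r}")
    return AddTool(intent="add", a=float(data["a"]), b=float(data["b"]))


# Pretend this string came back from the LLM.
llm_output = '{"intent": "add", "a": 3, "b": 4}'
call = parse_tool_call(llm_output)
result = call.a + call.b  # our code, not the model, performs the addition
print(call, result)
```

Nothing here is specific to calculators: any action the agent can take can be described the same way, as a small schema plus a handler.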


- fetch_file: {src: ./walkthrough/02-tool_calculator.baml, dest: baml_src/tool_calculator.baml}


- text: |


Now, let's update the agent's `DetermineNextStep` function to expose the calculator tools as potential next steps.


- fetch_file: {src: ./walkthrough/02-agent.baml, dest: baml_src/agent.baml}


- text: |


Now let's update our main function to show the tool call:


- file: {src: ./walkthrough/02-main.py}


- text: |


Let's try out the calculator! The agent should recognize that you want to perform a calculation and return the appropriate tool call instead of just a message.


- run_main: {regenerate_baml: true, args: "can you add 3 and 4"}


- name: tool-loop


title: "Chapter 3 - Process Tool Calls in a Loop"


text: "Now let's add a real agentic loop that can run the tools and get a final answer from the LLM."


steps:


- text: |


In this chapter, we'll enhance our agent to process tool calls in a loop. This means:


- The agent can call multiple tools in sequence


- Each tool result is fed back to the agent


- The agent continues until it has a final answer


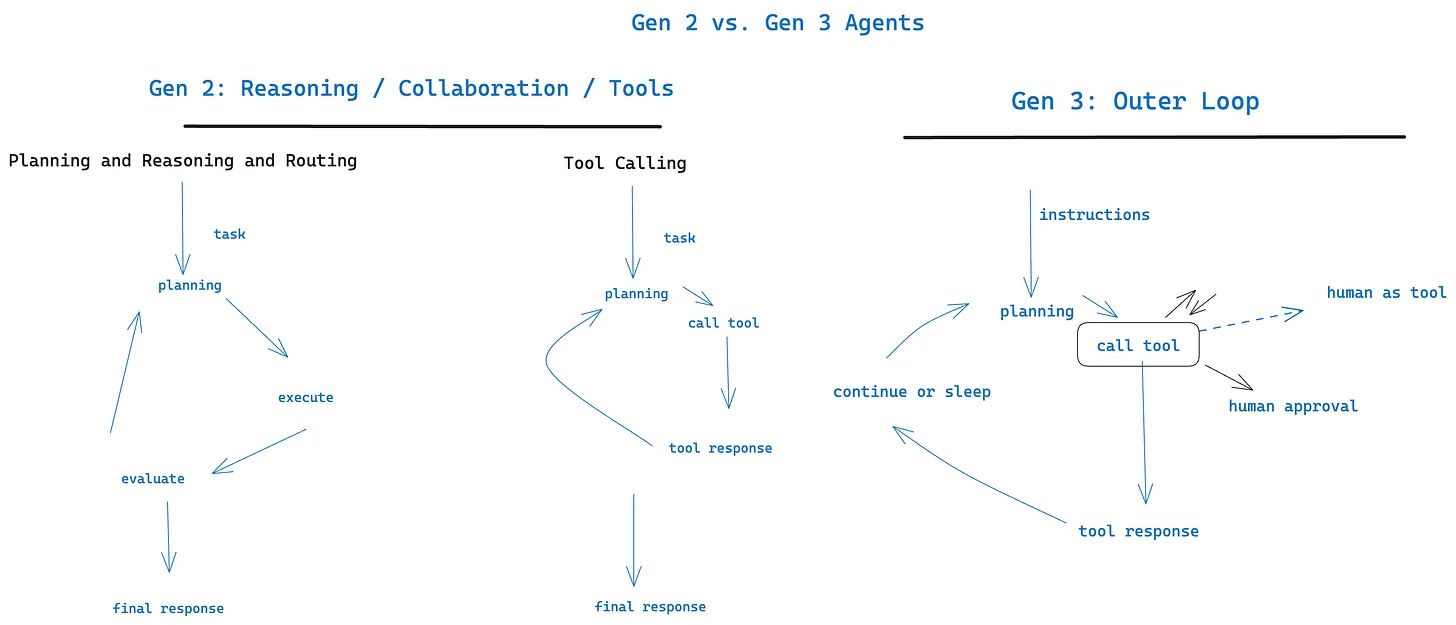

## The Agent Loop Pattern


We're implementing the core agent loop - where the AI makes decisions, executes tools, and continues until done.
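
Here's a rough sketch of that loop in plain Python. It is not the workshop's `03-agent.py`: the event dictionaries, the `intent` values, and `scripted_next_step` (a stand-in for the LLM call) are all assumptions made so the example runs without an API key.

```python
from typing import Callable


def add(a: float, b: float) -> float:
    return a + b


def run_loop(events: list, next_step: Callable[[list], dict]) -> str:
    """Ask for the next step, run tools, and feed results back until done."""
    while True:
        step = next_step(events)
        events.append({"type": "tool_call", "data": step})
        if step["intent"] == "done_for_now":
            return step["message"]
        if step["intent"] == "add":
            result = add(step["a"], step["b"])
            events.append({"type": "tool_response", "data": result})
        else:
            raise ValueError(f"unknown intent: {step['intent']}")


def scripted_next_step(events: list) -> dict:
    """Hypothetical stand-in for the LLM call: add once, then finish."""
    responses = [e["data"] for e in events if e["type"] == "tool_response"]
    if not responses:
        return {"intent": "add", "a": 3, "b": 4}
    return {"intent": "done_for_now", "message": f"The answer is {responses[-1]}"}


events = [{"type": "user_input", "data": "can you add 3 and 4"}]
answer = run_loop(events, scripted_next_step)
print(answer)
```

The loop itself is ordinary, deterministic code; only the choice of the next step is delegated to the model.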


## Factor 5: Unify Execution State


Notice how we're storing everything as events in our Thread - this is Factor 5 in action!


📖 **Learn more**: [Factor 5: Unify Execution State](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-05-unify-execution-state.md)
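
One payoff of keeping everything in a single event list is that the whole agent state can be saved and restored in one step. The sketch below assumes a `Thread` that holds a list of hypothetical `Event` objects; the workshop's own classes may be shaped differently.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Event:
    """Hypothetical event record: a type tag plus JSON-friendly data."""
    type: str
    data: object


@dataclass
class Thread:
    events: list = field(default_factory=list)

    def to_json(self) -> str:
        # asdict() recurses into the Event dataclasses inside the list.
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "Thread":
        payload = json.loads(raw)
        return cls(events=[Event(**e) for e in payload["events"]])


thread = Thread()
thread.events.append(Event("user_input", "can you add 3 and 4"))
thread.events.append(Event("tool_call", {"intent": "add", "a": 3, "b": 4}))
thread.events.append(Event("tool_response", 7))

# Round-trip through JSON: the restored thread carries the same state.
restored = Thread.from_json(thread.to_json())
print(restored == thread)
```

Because there is no separate "execution state" object to keep in sync, resuming an agent is just loading its events and running the loop again.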


Let's update our agent to handle tool calls properly:


- file: {src: ./walkthrough/03-agent.py}


- text: |


Now let's update our main function to use the new agent loop:


- file: {src: ./walkthrough/03-main.py}


- text: |


Let's try it out! The agent should now call the tool and return the calculated result:


- run_main: {regenerate_baml: true, args: "can you add 3 and 4"}


- text: |


You should see the agent:


1. Recognize it needs to use the add tool


2. Call the tool with the correct parameters


3. Get the result (7)


4. Generate a final response incorporating the result


For more complex calculations, we need to handle all calculator operations. Let's add support for subtract, multiply, and divide:


- file: {src: ./walkthrough/03b-agent.py}


- text: |


Now let's test subtraction:


- run_main: {regenerate_baml: false, args: "can you subtract 3 from 4"}


- text: |


Test multiplication:


- run_main: {regenerate_baml: false, args: "can you multiply 3 and 4"}


- text: |


Finally, let's test a complex multi-step calculation:


- run_main: {regenerate_baml: false, args: "can you multiply 3 and 4, then divide the result by 2 and then add 12 to that result"}


- text: |


Congratulations! You've taken your first step into hand-rolling an agent loop.


Key concepts you've learned:


- **Thread Management**: Tracking conversation history and tool calls


- **Tool Execution**: Processing different tool types and returning results


- **Agent Loop**: Continuing until the agent has a final answer
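
As one way to picture the **Tool Execution** step, the sketch below maps intents to functions with a dispatch table and replays the multi-step request from this chapter by hand. The intent names and the `execute_tool` helper are assumptions, not the contents of `03b-agent.py`.

```python
import operator

# Map each (hypothetical) intent name to the function that implements it.
OPERATIONS = {
    "add": operator.add,
    "subtract": operator.sub,
    "multiply": operator.mul,
    "divide": operator.truediv,
}


def execute_tool(step: dict) -> float:
    """Look up the operation for a step and apply it to the step's arguments."""
    try:
        operation = OPERATIONS[step["intent"]]
    except KeyError:
        raise ValueError(f"unknown intent: {step['intent']!r}") from None
    return operation(step["a"], step["b"])


# "multiply 3 and 4, then divide the result by 2 and then add 12 to that result"
product = execute_tool({"intent": "multiply", "a": 3, "b": 4})     # 12
quotient = execute_tool({"intent": "divide", "a": product, "b": 2})  # 6.0
total = execute_tool({"intent": "add", "a": quotient, "b": 12})      # 18.0
print(product, quotient, total)
```

In the real agent the model chooses each step and sees each result in the thread; the arithmetic itself always stays in ordinary code.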


From here, we'll start incorporating more intermediate and advanced concepts for 12-factor agents.


- name: baml-tests


title: "Chapter 4 - Add Tests to agent.baml"


text: "Let's add some tests to our BAML agent."


steps:


- text: |


In this chapter, we'll learn about BAML testing - a powerful feature that helps ensure your agents behave correctly.


## Why Test BAML Functions?


- **Catch regressions**: Ensure changes don't break existing behavior


- **Document behavior**: Tests serve as living documentation


- **Validate edge cases**: Test complex scenarios and conversation flows


- **CI/CD integration**: Run tests automatically in your pipeline


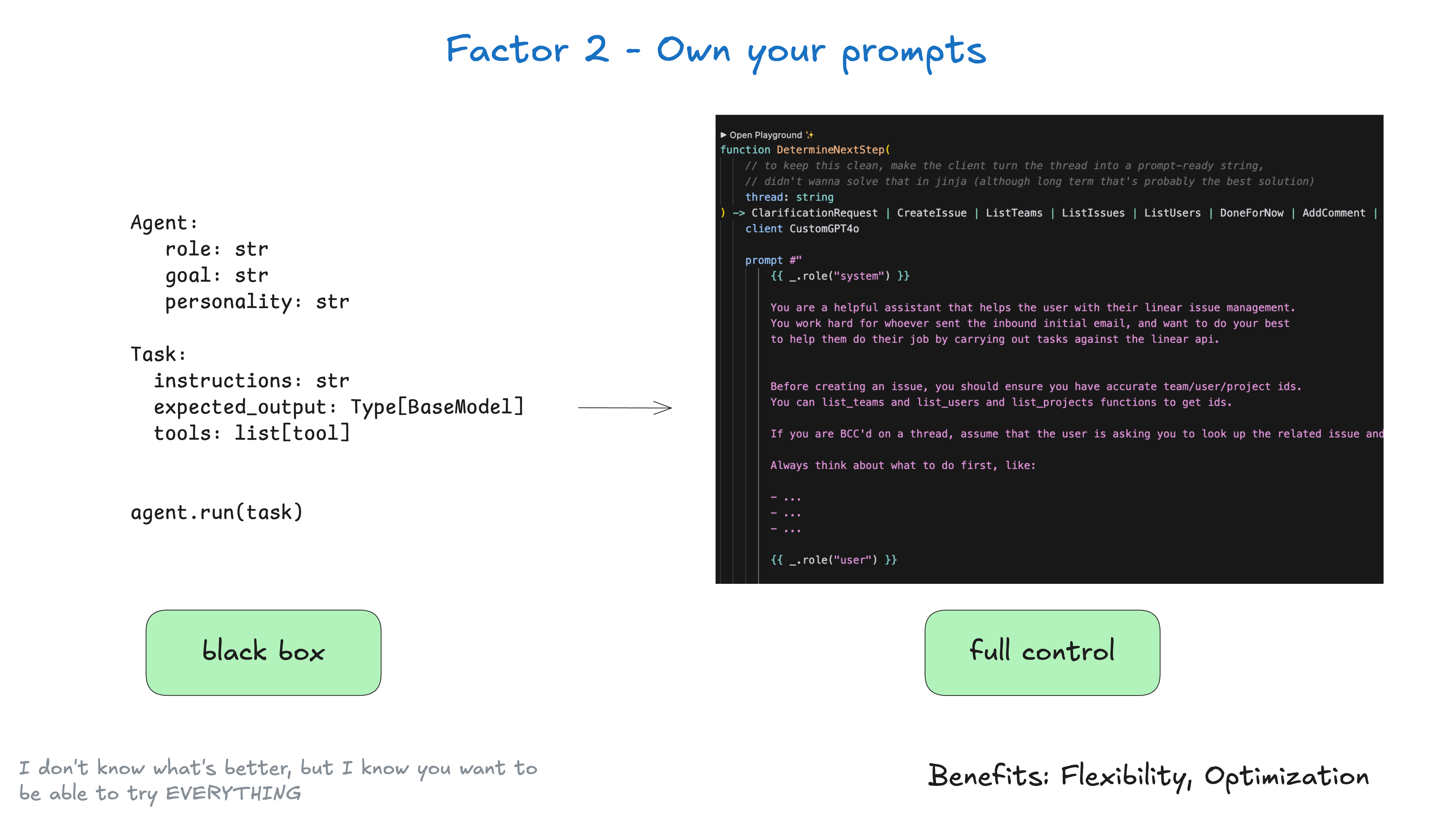

## Factor 2: Own Your Prompts


Testing is a key part of owning your prompts - you need to verify they work as expected!


📖 **Learn more**: [Factor 2: Own Your Prompts](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-02-own-your-prompts.md)


Let's start with a simple test that checks the agent's ability to handle basic interactions:


- fetch_file: {src: ./walkthrough/04-agent.baml, dest: baml_src/agent.baml}


- text: |


Run the tests to see them in action:


- command: "!baml-cli test"


- text: |


Now let's improve the tests with assertions! Assertions let you verify specific properties of the agent's output.


## BAML Assertion Syntax


Assertions use the `@@assert` directive:


```
@@assert(name, {{condition}})
```


- `name`: A descriptive name for the assertion


- `condition`: A boolean expression using `this` to access the output


- fetch_file: {src: ./walkthrough/04b-agent.baml, dest: baml_src/agent.baml}


- text: |


Run the tests again to see assertions in action:


- command: "!baml-cli test"


- text: |


Finally, let's add more complex test cases that test multi-step conversations.


These tests simulate an entire conversation flow, including:


- User input


- Tool calls made by the agent


- Tool responses


- Final agent response


- fetch_file: {src: ./walkthrough/04c-agent.baml, dest: baml_src/agent.baml}


- text: |


Run the comprehensive test suite:


- command: "!baml-cli test"


- text: |


## Key Testing Concepts


1. **Test Structure**: Each test specifies functions, arguments, and assertions


2. **Progressive Testing**: Start simple, then test complex scenarios


3. **Conversation History**: Test how the agent handles multi-turn conversations


4. **Tool Integration**: Verify the agent correctly uses tools in sequence


With these tests in place, you can confidently modify your agent knowing that core functionality is protected by automated tests!


- name: human-tools


title: "Chapter 5 - Multiple Human Tools"


text: |


In this section, we'll add support for multiple tools that serve to contact humans.


steps:


- text: |


So far, the only way our agent can talk to the user is by returning a final answer with `done_for_now`. But what if the agent needs clarification?


Let's add a new tool that allows the agent to request more information from the user.


## Why Human-in-the-Loop?


- **Handle ambiguous inputs**: When user input is unclear or contains typos


- **Request missing information**: When the agent needs more context


- **Confirm sensitive operations**: Before performing important actions


- **Interactive workflows**: Build conversational agents that engage users


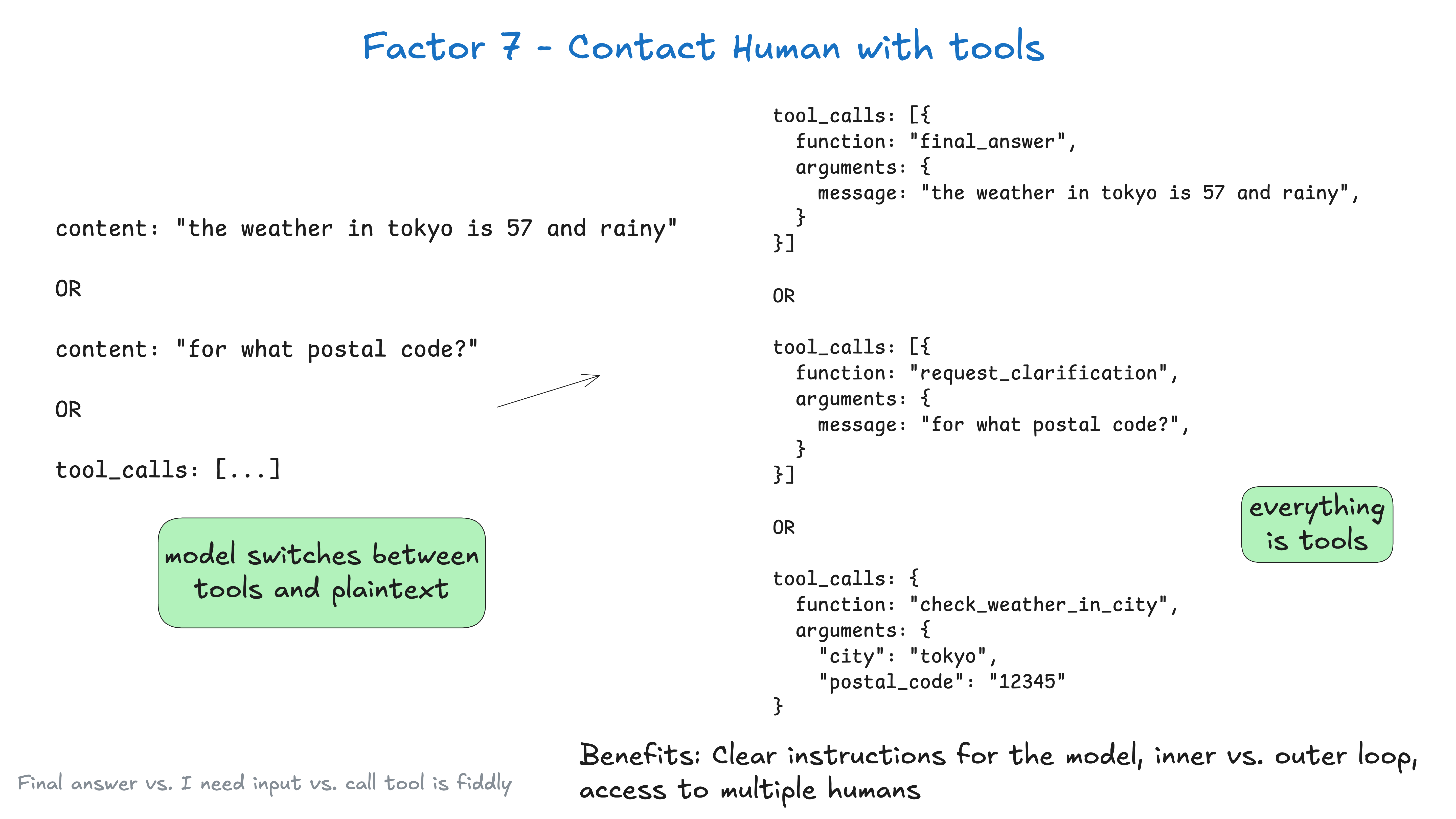

## Factor 7: Contact Humans with Tools


This is a critical pattern - treating human interaction as just another tool call!


📖 **Learn more**: [Factor 7: Contact Humans with Tools](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-07-contact-humans-with-tools.md)


This enables **outer-loop agents** - agents that can pause execution and wait for human input.
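
A small sketch of that pause-and-resume shape follows. It rests on assumptions: `request_more_information` is a hypothetical intent name for the clarification tool, the event dictionaries are illustrative, and `scripted_next_step` stands in for the LLM so the example runs offline.

```python
from typing import Callable

# Intents that hand control back to a person. "request_more_information" is a
# hypothetical name; use whatever intent your BAML file defines.
HUMAN_INTENTS = {"done_for_now", "request_more_information"}


def run_until_human(events: list, next_step: Callable[[list], dict]) -> dict:
    """Advance the agent until it picks a tool that contacts a human."""
    while True:
        step = next_step(events)
        events.append({"type": "tool_call", "data": step})
        if step["intent"] in HUMAN_INTENTS:
            return step  # pause here; the caller decides how to reach the human
        if step["intent"] == "multiply":
            events.append({"type": "tool_response", "data": step["a"] * step["b"]})
        else:
            raise ValueError(f"unknown intent: {step['intent']}")


def scripted_next_step(events: list) -> dict:
    """Hypothetical stand-in for the LLM: ask once, multiply, then finish."""
    kinds = [e["type"] for e in events]
    if "human_response" not in kinds:
        return {"intent": "request_more_information",
                "message": "What should I multiply 3 by?"}
    if "tool_response" not in kinds:
        return {"intent": "multiply", "a": 3, "b": 4}
    return {"intent": "done_for_now",
            "message": f"3 times 4 is {events[-1]['data']}"}


events = [{"type": "user_input", "data": "can you multiply 3 and FD*(#F&&"}]
pending = run_until_human(events, scripted_next_step)   # pauses on the question
events.append({"type": "human_response", "data": "4"})  # the human answers
final = run_until_human(events, scripted_next_step)     # resumes from the same events
print(pending["message"], "->", final["message"])
```

Between the two calls nothing is kept in memory except `events`, so the wait for a human could last seconds or days.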


First, let's update our BAML file to include a ClarificationRequest tool:


- fetch_file: {src: ./walkthrough/05-agent.baml, dest: baml_src/agent.baml}


- text: |


Now let's update our agent to handle clarification requests:


- file: {src: ./walkthrough/05-agent.py}


- text: |


Finally, let's create a main function that handles human interaction:


- file: {src: ./walkthrough/05-main.py}


- text: |


Let's test with an ambiguous input that should trigger a clarification request:


- run_main: {regenerate_baml: true, args: "can you multiply 3 and FD*(#F&&"}


- text: |


You should see:


1. The agent recognizes the input is unclear


2. It asks for clarification


3. In Colab, you'll be prompted to type a response


4. In local testing, an auto-response is provided


5. The agent continues with the clarified input


## Interactive Testing in Colab


When running in Google Colab, the `input()` function will create an interactive text box where you can type your response. Try different clarifications to see how the agent adapts!


## Key Concepts


- **Human Tools**: Special tool types that return control to the human


- **Conversation Flow**: The agent can pause execution to get human input


- **Context Preservation**: The full conversation history is maintained


- **Flexible Handling**: Different behaviors for different environments
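
**Flexible Handling** might look something like the sketch below. The `in_colab` check, the `get_human_response` helper, and the canned auto-response are assumptions for illustration; `05-main.py` may detect its environment differently.

```python
import sys
from typing import Optional


def in_colab() -> bool:
    """Heuristic: the google.colab package is already imported inside Colab."""
    return "google.colab" in sys.modules


def get_human_response(question: str,
                       interactive: Optional[bool] = None,
                       auto_response: str = "multiply 3 and 4") -> str:
    """Ask a person when interactive; otherwise fall back to a canned reply."""
    if interactive is None:
        interactive = in_colab()
    if interactive:
        return input(f"{question}\n> ")
    print(f"{question}\n> {auto_response}  (auto-response)")
    return auto_response


# Force the non-interactive path so the example runs unattended.
reply = get_human_response("What should I multiply 3 by?", interactive=False)
```

Keeping the environment check in one helper means the agent loop never needs to know whether a person or a test fixture supplied the answer.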


- name: customize-prompt


title: "Chapter 6 - Customize Your Prompt with Reasoning"


text: |


In this section, we'll explore how to customize the prompt of the agent with reasoning steps.


This is core to [factor 2 - own your prompts](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-02-own-your-prompts.md)


steps:


- text: |


## Why Add Reasoning to Prompts?


Adding explicit reasoning steps to your prompts can significantly improve agent performance:


- **Better decisions**: The model thinks through problems step-by-step


- **Transparency**: You can see the model's thought process


- **Fewer errors**: Structured thinking reduces mistakes


- **Debugging**: Easier to identify where reasoning went wrong


## Factor 2: Own Your Prompts


This chapter demonstrates taking full control of your prompts - they're first-class code!


📖 **Learn more**: [Factor 2: Own Your Prompts](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-02-own-your-prompts.md)


Let's update our agent prompt to include a reasoning step:


- fetch_file: {src: ./walkthrough/06-agent.baml, dest: baml_src/agent.baml}


- text: |


Now let's test it with a simple calculation to see the reasoning in action:


- run_main: {regenerate_baml: true, args: "can you multiply 3 and 4"}


- text: |


You should notice in the BAML logs (if enabled) that the model now includes reasoning steps before deciding what to do.


## Advanced Prompt Engineering


You can enhance your prompts further by:


- Adding specific reasoning templates for different tasks


- Including examples of good reasoning


- Structuring the reasoning with numbered steps


- Adding checks for common mistakes


The key is to guide the model's thinking process while still allowing flexibility.
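
In this workshop the prompt lives in `agent.baml`, but the same ideas can be sketched in plain Python. The template below is hypothetical (the wording, the `REASONING_STEPS` list, and `build_prompt` are not taken from the workshop files); it shows numbered reasoning steps plus one check for a common mistake.

```python
# Hypothetical reasoning questions; adapt them to your own agent's tasks.
REASONING_STEPS = [
    "What is the user asking for?",
    "Which tool, if any, fits the request?",
    "Are all required arguments present and valid?",
]


def build_prompt(thread_text: str) -> str:
    """Assemble a prompt that asks the model to reason before choosing a step."""
    numbered = "\n".join(
        f"{index}. {question}"
        for index, question in enumerate(REASONING_STEPS, start=1)
    )
    return (
        "You are a helpful assistant that can use calculator tools.\n\n"
        f"Conversation so far:\n{thread_text}\n\n"
        "Before choosing the next step, think through:\n"
        f"{numbered}\n\n"
        "Common mistake to check: never divide by zero.\n"
    )


prompt = build_prompt("user: can you multiply 3 and 4")
print(prompt)
```

Because the prompt is ordinary code, you can diff it, review it, and test it like anything else in the repository.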


- name: context-window


title: "Chapter 7 - Customize Your Context Window"


text: |


In this section, we'll explore how to customize the context window of the agent.


This is core to [factor 3 - own your context window](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-03-own-your-context-window.md)


steps:


- text: |


## Context Window Serialization


How you format your conversation history can significantly impact:


- **Token usage**: Some formats are more efficient


- **Model understanding**: Clear structure helps the model


- **Debugging**: Readable formats help development


## Factor 3: Own Your Context Window


Context engineering is everything! This is one of the most important factors.


📖 **Learn more**: [Factor 3: Own Your Context Window](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-03-own-your-context-window.md)


Let's implement two serialization formats: pretty-printed JSON and XML.


- file: {src: ./walkthrough/07-agent.py}


- text: |


Now let's create a main function that can switch between formats:


- file: {src: ./walkthrough/07-main.py}


- text: |


Let's test with JSON format first:


- run_main: {regenerate_baml: true, args: "can you multiply 3 and 4, then divide the result by 2", kwargs: {use_xml: false}}


- text: |


Now let's try the same with XML format:


- run_main: {regenerate_baml: false, args: "can you multiply 3 and 4, then divide the result by 2", kwargs: {use_xml: true}}


- text: |


## XML vs JSON Trade-offs


**XML Benefits**:


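- Can be more token-efficient than pretty-printed JSON for nested data, since it needs fewer quotes, braces, and escapes

- Clear hierarchy with opening/closing tags

- Event boundaries stay easy to spot in long conversations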


**JSON Benefits**:


- Familiar to most developers


- Easy to parse and debug


- Native to JavaScript and supported by Python's standard library (`json`)


Choose based on your specific needs and token constraints!
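
To compare the two formats on your own data, a sketch like the following can help. The event shapes and the tag-per-event XML layout are assumptions, not the exact output of `07-agent.py`, and character counts are only a rough proxy for tokens.

```python
import json
from xml.sax.saxutils import escape


def to_json(events: list) -> str:
    """Serialize the whole event list as pretty-printed JSON."""
    return json.dumps(events, indent=2)


def to_xml(events: list) -> str:
    """Serialize each event as <type>...</type> with a simple key: value body."""
    blocks = []
    for event in events:
        data = event["data"]
        if isinstance(data, dict):
            body = "\n".join(f"{key}: {value}" for key, value in data.items())
        else:
            body = str(data)
        # escape() protects &, < and > so user text cannot break the tags.
        blocks.append(f"<{event['type']}>\n{escape(body)}\n</{event['type']}>")
    return "\n\n".join(blocks)


events = [
    {"type": "user_input", "data": "can you multiply 3 and 4"},
    {"type": "tool_call", "data": {"intent": "multiply", "a": 3, "b": 4}},
    {"type": "tool_response", "data": 12},
]

as_json = to_json(events)
as_xml = to_xml(events)
print(as_xml)
print(len(as_json), "characters as JSON vs", len(as_xml), "characters as XML")
```

For a real comparison, run both strings through your model's tokenizer rather than counting characters.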


## What's Next?


In the remaining chapters (8-12), we'll build on these foundations to add:


- **API endpoints** for serving your agent


- **State persistence** with async operations


- **Human approval workflows** (Factor 8: Own Your Control Flow)


- **Email-based approvals** via HumanLayer


- **Webhook integration** for launch/pause/resume patterns (Factor 6)


Each step brings us closer to production-ready agents that can handle real-world complexity!


📖 **Further Reading**:


- [Factor 6: Launch/Pause/Resume](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-06-launch-pause-resume.md)


- [Factor 8: Own Your Control Flow](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-08-own-your-control-flow.md)


- [Factor 9: Compact Errors](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-09-compact-errors.md)


- [Factor 10: Small, Focused Agents](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-10-small-focused-agents.md)


- [Factor 11: Trigger From Anywhere](https://github.com/humanlayer/12-factor-agents/blob/main/content/factor-11-trigger-from-anywhere.md)
