Compare commits

10 Commits

fast-api-t

...

ccxt

| Author | SHA1 | Date | |
|---|---|---|---|
| | 9ef0812480 | | |
| | 05bb4d9359 | | |
| | f4961413bc | | |
| | 37d3e7ec30 | | |
| | 5466470a1e | | |
| | 7e3dc05259 | | |
| | 50806b9eb8 | | |
| | fe82bc0fe6 | | |
| | 26381a3329 | | |
| | 10da7af267 | | |

2

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@@ -8,7 +8,7 @@ assignees: ''

|

||||

---

|

||||

|

||||

<!--

|

||||

IMPORTANT: Please open an issue ONLY if you find something wrong with the source code. For questions and feedback use Discord (https://jesse.trade/discord). Also make sure to give the documentation (https://docs.jesse.trade/) and FAQ (https://jesse.trade/help) a good read to eliminate the possibility of causing the problem due to wrong usage. Make sure you are using the most recent version `pip show jesse` and updated all requirements `pip install -r https://raw.githubusercontent.com/jesse-ai/jesse/master/requirements.txt`.

|

||||

IMPORTANT: Please open an issue ONLY if you find something wrong with the source code. For questions and feedback use the Forum (https://forum.jesse.trade/) or Discord (https://jesse.trade/discord). Also make sure to give the documentation (https://docs.jesse.trade/) a good read to eliminate the possibility of causing the problem due to wrong usage. Make sure you are using the most recent version `pip show jesse` and updated all requirements `pip install -r https://raw.githubusercontent.com/jesse-ai/jesse/master/requirements.txt`.

|

||||

-->

|

||||

|

||||

**Describe the bug**

|

||||

|

||||

2

.github/ISSUE_TEMPLATE/feature_request.md

vendored

@@ -8,7 +8,7 @@ assignees: ''

|

||||

---

|

||||

|

||||

<!---

|

||||

Make sure to check the roadmap (https://docs.jesse.trade/docs/roadmap.html) and the Trello boards linked there whether your idea is already listed and give Jesse's documentation a good read to make sure you don't request something that's already possible. If possible use Discord (https://jesse.trade/discord) to discuss your ideas with the community.

|

||||

Make sure to check the Projects tab if your idea is already listed and give Jesse's documentation a good read to make sure you don't request something that's already possible.

|

||||

-->

|

||||

|

||||

**Is your feature request related to a problem? Please describe.**

|

||||

|

||||

11

.github/dependabot.yml

vendored

@@ -1,11 +0,0 @@
# To get started with Dependabot version updates, you'll need to specify which
# package ecosystems to update and where the package manifests are located.
# Please see the documentation for all configuration options:
# https://help.github.com/github/administering-a-repository/configuration-options-for-dependency-updates

version: 2
updates:
  - package-ecosystem: "pip" # See documentation for possible values
    directory: "/" # Location of package manifests
    schedule:
      interval: "daily"

98

.github/workflows/codeql-analysis.yml

vendored

@@ -1,98 +0,0 @@

|

||||

# For most projects, this workflow file will not need changing; you simply need

|

||||

# to commit it to your repository.

|

||||

#

|

||||

# You may wish to alter this file to override the set of languages analyzed,

|

||||

# or to provide custom queries or build logic.

|

||||

#

|

||||

# ******** NOTE ********

|

||||

# We have attempted to detect the languages in your repository. Please check

|

||||

# the `language` matrix defined below to confirm you have the correct set of

|

||||

# supported CodeQL languages.

|

||||

#

|

||||

name: "CodeQL"

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [ master ]

|

||||

pull_request:

|

||||

# The branches below must be a subset of the branches above

|

||||

branches: [ master ]

|

||||

schedule:

|

||||

- cron: '0 0 1 * *'

|

||||

|

||||

jobs:

|

||||

analyze:

|

||||

name: Analyze

|

||||

runs-on: ubuntu-latest

|

||||

permissions:

|

||||

actions: read

|

||||

contents: read

|

||||

security-events: write

|

||||

|

||||

strategy:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

language: [ 'python' ]

|

||||

# CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]

|

||||

# Learn more about CodeQL language support at https://git.io/codeql-language-support

|

||||

|

||||

steps:

|

||||

- name: Checkout repository

|

||||

uses: actions/checkout@v2

|

||||

- name: Cache pip

|

||||

uses: actions/cache@v2

|

||||

with:

|

||||

path: ${{ matrix.path }}

|

||||

key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}

|

||||

restore-keys: |

|

||||

${{ runner.os }}-pip-

|

||||

- name: Install ta-lib

|

||||

run: |

|

||||

if ([ "$RUNNER_OS" = "macOS" ]); then

|

||||

brew install ta-lib

|

||||

fi

|

||||

if ([ "$RUNNER_OS" = "Linux" ]); then

|

||||

if [ ! -f "$GITHUB_WORKSPACE/ta-lib/src" ]; then wget http://prdownloads.sourceforge.net/ta-lib/ta-lib-0.4.0-src.tar.gz -q && tar -xzf ta-lib-0.4.0-src.tar.gz; fi

|

||||

cd ta-lib/

|

||||

./configure --prefix=/usr

|

||||

if [ ! -f "$HOME/ta-lib/src" ]; then make; fi

|

||||

sudo make install

|

||||

cd

|

||||

fi

|

||||

if ([ "$RUNNER_OS" = "Windows" ]); then

|

||||

curl -sL http://prdownloads.sourceforge.net/ta-lib/ta-lib-0.4.0-msvc.zip -o $GITHUB_WORKSPACE/ta-lib.zip --create-dirs && 7z x $GITHUB_WORKSPACE/ta-lib.zip -o/c/ta-lib && mv /c/ta-lib/ta-lib/* /c/ta-lib/ && rm -rf /c/ta-lib/ta-lib && cd /c/ta-lib/c/make/cdr/win32/msvc && nmake

|

||||

fi

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

if [ -f requirements.txt ]; then pip install -r requirements.txt; fi

|

||||

pip install numba

|

||||

|

||||

# Initializes the CodeQL tools for scanning.

|

||||

- name: Initialize CodeQL

|

||||

uses: github/codeql-action/init@v1

|

||||

with:

|

||||

languages: ${{ matrix.language }}

|

||||

# If you wish to specify custom queries, you can do so here or in a config file.

|

||||

# By default, queries listed here will override any specified in a config file.

|

||||

# Prefix the list here with "+" to use these queries and those in the config file.

|

||||

# queries: ./path/to/local/query, your-org/your-repo/queries@main

|

||||

|

||||

# Autobuild attempts to build any compiled languages (C/C++, C#, or Java).

|

||||

# If this step fails, then you should remove it and run the build manually (see below)

|

||||

- name: Autobuild

|

||||

uses: github/codeql-action/autobuild@v1

|

||||

|

||||

# ℹ️ Command-line programs to run using the OS shell.

|

||||

# 📚 https://git.io/JvXDl

|

||||

|

||||

# ✏️ If the Autobuild fails above, remove it and uncomment the following three lines

|

||||

# and modify them (or add more) to build your code if your project

|

||||

# uses a compiled language

|

||||

|

||||

#- run: |

|

||||

# make bootstrap

|

||||

# make release

|

||||

|

||||

- name: Perform CodeQL Analysis

|

||||

uses: github/codeql-action/analyze@v1

|

||||

81

.github/workflows/python-package.yml

vendored

@@ -1,81 +0,0 @@

|

||||

# This workflow will install Python dependencies, run tests and lint with a single version of Python

|

||||

# For more information see: https://help.github.com/actions/language-and-framework-guides/using-python-with-github-actions

|

||||

|

||||

name: Python application

|

||||

|

||||

on:

|

||||

push:

|

||||

branches: [ master ]

|

||||

pull_request:

|

||||

branches: [ master ]

|

||||

|

||||

concurrency:

|

||||

group: ${{ github.workflow }}-${{ github.ref }}

|

||||

cancel-in-progress: true

|

||||

|

||||

jobs:

|

||||

build:

|

||||

|

||||

runs-on: ${{matrix.os}}

|

||||

strategy:

|

||||

matrix:

|

||||

# os: [ubuntu-latest, macos-latest, windows-latest]

|

||||

os: [ubuntu-latest]

|

||||

include:

|

||||

- os: ubuntu-latest

|

||||

path: ~/.cache/pip

|

||||

#- os: macos-latest

|

||||

# path: ~/Library/Caches/pip

|

||||

#- os: windows-latest

|

||||

# path: ~\AppData\Local\pip\Cache

|

||||

python-version: [3.7, 3.8, 3.9]

|

||||

|

||||

|

||||

steps:

|

||||

- name: Checkout

|

||||

uses: actions/checkout@v2

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

uses: actions/setup-python@v2

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

- name: Cache pip

|

||||

uses: actions/cache@v2

|

||||

with:

|

||||

path: ${{ matrix.path }}

|

||||

key: ${{ runner.os }}-pip-${{ hashFiles('**/requirements.txt') }}

|

||||

restore-keys: |

|

||||

${{ runner.os }}-pip-

|

||||

- name: Install ta-lib

|

||||

run: |

|

||||

if ([ "$RUNNER_OS" = "macOS" ]); then

|

||||

brew install ta-lib

|

||||

fi

|

||||

if ([ "$RUNNER_OS" = "Linux" ]); then

|

||||

if [ ! -f "$GITHUB_WORKSPACE/ta-lib/src" ]; then wget http://prdownloads.sourceforge.net/ta-lib/ta-lib-0.4.0-src.tar.gz -q && tar -xzf ta-lib-0.4.0-src.tar.gz; fi

|

||||

cd ta-lib/

|

||||

./configure --prefix=/usr

|

||||

if [ ! -f "$HOME/ta-lib/src" ]; then make; fi

|

||||

sudo make install

|

||||

cd

|

||||

fi

|

||||

if ([ "$RUNNER_OS" = "Windows" ]); then

|

||||

curl -sL http://prdownloads.sourceforge.net/ta-lib/ta-lib-0.4.0-msvc.zip -o $GITHUB_WORKSPACE/ta-lib.zip --create-dirs && 7z x $GITHUB_WORKSPACE/ta-lib.zip -o/c/ta-lib && mv /c/ta-lib/ta-lib/* /c/ta-lib/ && rm -rf /c/ta-lib/ta-lib && cd /c/ta-lib/c/make/cdr/win32/msvc && nmake

|

||||

fi

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

if [ -f requirements.txt ]; then pip install -r requirements.txt; fi

|

||||

pip install numba

|

||||

# - name: Lint with flake8

|

||||

# run: |

|

||||

# stop the build if there are Python syntax errors or undefined names

|

||||

# flake8 . --count --select=E9,F63,F7,F82 --show-source --statistics

|

||||

# exit-zero treats all errors as warnings. The GitHub editor is 127 chars wide

|

||||

# flake8 . --count --exit-zero --max-complexity=10 --max-line-length=127 --statistics

|

||||

- name: Install jesse

|

||||

run: |

|

||||

pip install -e . -U

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

pip install pytest

|

||||

pytest

|

||||

3

.gitignore

vendored

@@ -147,5 +147,4 @@ cython_debug/

|

||||

/storage/*.gz

|

||||

/.vagrant

|

||||

testing-*.py

|

||||

/storage/full-reports/*.html

|

||||

/storage/logs/*

|

||||

/storage/full-reports/*.html

|

||||

@@ -2,7 +2,7 @@ FROM python:3.9.4-slim

|

||||

ENV PYTHONUNBUFFERED 1

|

||||

|

||||

RUN apt-get update \

|

||||

&& apt-get -y install git build-essential libssl-dev \

|

||||

&& apt-get -y install build-essential libssl-dev \

|

||||

&& apt-get clean \

|

||||

&& pip install --upgrade pip

|

||||

|

||||

|

||||

239

README.md

@@ -1,8 +1,94 @@

|

||||

# Jesse beta (GUI dashboard)

|

||||

# Jesse

|

||||

[](https://pypi.org/project/jesse)

|

||||

[](https://pepy.tech/project/jesse)

|

||||

[](https://hub.docker.com/r/salehmir/jesse)

|

||||

[](https://github.com/jesse-ai/jesse)

|

||||

|

||||

Here's a quick guide on how to set up and run the dashboard branch until it is officially released.

|

||||

---

|

||||

|

||||

[](https://jesse.trade)

|

||||

[](https://docs.jesse.trade)

|

||||

[](https://jesse.trade/help)

|

||||

[](https://jesse.trade/discord)

|

||||

[](https://jesse.trade/blog)

|

||||

---

|

||||

Jesse is an advanced crypto trading framework which aims to simplify researching and defining trading strategies.

|

||||

|

||||

## Why Jesse?

|

||||

In short, Jesse is more accurate than other solutions, and way more simple.

|

||||

In fact, it is so simple that in case you already know Python, you can get started today, in matter of minutes, instead of weeks and months.

|

||||

|

||||

[Here](https://docs.jesse.trade/docs/) you can read more about why Jesse's features.

|

||||

|

||||

## Getting Started

|

||||

Head over to the "getting started" section of the [documentation](https://docs.jesse.trade/docs/getting-started). The

|

||||

documentation is short yet very informative.

|

||||

|

||||

## Example Backtest Results

|

||||

|

||||

Check out Jesse's [blog](https://jesse.trade/blog) for tutorials that go through example strategies step by step.

|

||||

|

||||

Here's an example output for a backtest simulation just to get you excited:

|

||||

```

|

||||

CANDLES |

|

||||

----------------------+--------------------------

|

||||

period | 1792 days (4.91 years)

|

||||

starting-ending date | 2016-01-01 => 2020-11-27

|

||||

|

||||

|

||||

exchange | symbol | timeframe | strategy | DNA

|

||||

------------+----------+-------------+------------------+-------

|

||||

Bitfinex | BTC-USD | 6h | TrendFollowing05 |

|

||||

|

||||

|

||||

Executing simulation... [####################################] 100%

|

||||

Executed backtest simulation in: 135.85 seconds

|

||||

|

||||

|

||||

METRICS |

|

||||

---------------------------------+------------------------------------

|

||||

Total Closed Trades | 221

|

||||

Total Net Profit | 1,699,245.56 (1699.25%)

|

||||

Starting => Finishing Balance | 100,000 => 1,799,245.56

|

||||

Total Open Trades | 0

|

||||

Open PL | 0

|

||||

Total Paid Fees | 331,480.93

|

||||

Max Drawdown | -22.42%

|

||||

Annual Return | 80.09%

|

||||

Expectancy | 7,688.89 (7.69%)

|

||||

Avg Win | Avg Loss | 31,021.9 | 8,951.7

|

||||

Ratio Avg Win / Avg Loss | 3.47

|

||||

Percent Profitable | 42%

|

||||

Longs | Shorts | 60% | 40%

|

||||

Avg Holding Time | 3.0 days, 22.0 hours, 50.0 minutes

|

||||

Winning Trades Avg Holding Time | 6.0 days, 14.0 hours, 9.0 minutes

|

||||

Losing Trades Avg Holding Time | 2.0 days, 1.0 hour, 41.0 minutes

|

||||

Sharpe Ratio | 1.88

|

||||

Calmar Ratio | 3.57

|

||||

Sortino Ratio | 3.51

|

||||

Omega Ratio | 1.49

|

||||

Winning Streak | 5

|

||||

Losing Streak | 10

|

||||

Largest Winning Trade | 205,575.89

|

||||

Largest Losing Trade | -50,827.92

|

||||

Total Winning Trades | 92

|

||||

Total Losing Trades | 129

|

||||

```
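
Several figures in the report above can be cross-checked against one another. The sketch below recomputes a few of them from the values shown. The formulas used here (return on starting balance, win rate as winning trades over closed trades, expectancy as average profit per closed trade) are assumptions about how the metrics relate, not a copy of Jesse's own implementation.

```python
# Values copied from the example backtest report above.
starting_balance = 100_000.0
finishing_balance = 1_799_245.56
winning_trades = 92
losing_trades = 129
avg_win = 31_021.9
avg_loss = 8_951.7

total_trades = winning_trades + losing_trades

# Net profit as an absolute value and as a percentage of the starting balance.
net_profit = finishing_balance - starting_balance
net_profit_pct = net_profit / starting_balance * 100

# Share of closed trades that were winners.
win_rate_pct = winning_trades / total_trades * 100

# Average win divided by average loss.
win_loss_ratio = avg_win / avg_loss

# Assumed definition: average profit per closed trade.
expectancy = (winning_trades * avg_win - losing_trades * avg_loss) / total_trades

print(f"Total closed trades: {total_trades}")
print(f"Net profit: {net_profit:,.2f} ({net_profit_pct:.2f}%)")
print(f"Percent profitable: {win_rate_pct:.0f}%")
print(f"Ratio avg win / avg loss: {win_loss_ratio:.2f}")
print(f"Expectancy: {expectancy:,.2f}")
```

Under these assumptions the recomputed values agree with the report to the precision shown, which is a quick sanity check worth repeating on your own backtest output.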

|

||||

|

||||

And here are generated charts:

|

||||

|

||||

|

||||

## What's next?

|

||||

This is the very initial release. There's way more. Subscribe to our mailing list at [jesse.trade](https://jesse.trade) to get the good stuff as soon they're released. Don't worry, We won't send you spam. Pinky promise.

|

||||

|

||||

## Community

|

||||

I created a [discord server](https://jesse.trade/discord) for Jesse users to discuss algo-trading. It's a warm place to share ideas, and help each other out.

|

||||

|

||||

## How to contribute

|

||||

Thank you for your interest in contributing to the project. Before starting to work on a PR, please make sure that it isn't under the "in progress" column in our [Github project page](https://github.com/jesse-ai/jesse/projects/2). In case you want to help but don't know what tasks we need help for, checkout the "todo" column.

|

||||

|

||||

First, you need to install Jesse from the repository instead of PyPi:

|

||||

|

||||

First, you need to set up Jesse from the source code if you haven't already:

|

||||

```sh

|

||||

# first, make sure that the PyPi version is not installed

|

||||

pip uninstall jesse

|

||||

@@ -13,149 +99,12 @@ cd jesse

|

||||

pip install -e .

|

||||

```

|

||||

|

||||

Then you need to switch to the `beta` branch:

|

||||

```sh

|

||||

git checkout beta

|

||||

Now every change you make to the code will be affected immediately.

|

||||

|

||||

After every change, make sure your changes did not break any functionality by running tests:

|

||||

```

|

||||

|

||||

Now go to your Jesse project (where you used to run backtest command, etc) and first create a `.env` file with the below configuration:

|

||||

|

||||

```sh

|

||||

nano .env

|

||||

```

|

||||

|

||||

```

|

||||

PASSWORD=test

|

||||

|

||||

POSTGRES_HOST=127.0.0.1

|

||||

POSTGRES_NAME=jesse_db

|

||||

POSTGRES_PORT=5432

|

||||

POSTGRES_USERNAME=jesse_user

|

||||

POSTGRES_PASSWORD=password

|

||||

|

||||

REDIS_HOST=localhost

|

||||

REDIS_PORT=6379

|

||||

REDIS_PASSWORD=

|

||||

|

||||

|

||||

# # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # #

|

||||

# Live Trade Only #

|

||||

# =============================================================================== #

|

||||

# Below values don't concern you if you haven't installed the live trade plugin #

|

||||

# # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # # #

|

||||

|

||||

# For all notifications

|

||||

GENERAL_TELEGRAM_BOT_TOKEN=

|

||||

GENERAL_TELEGRAM_BOT_CHAT_ID=

|

||||

GENERAL_DISCORD_WEBHOOK=

|

||||

|

||||

# For error notifications only

|

||||

ERROR_TELEGRAM_BOT_TOKEN=

|

||||

ERROR_TELEGRAM_BOT_CHAT_ID=

|

||||

ERROR_DISCORD_WEBHOOK=

|

||||

|

||||

# Testnet Binance Futures:

|

||||

# http://testnet.binancefuture.com

|

||||

TESTNET_BINANCE_FUTURES_API_KEY=

|

||||

TESTNET_BINANCE_FUTURES_API_SECRET=

|

||||

|

||||

# Binance Futures:

|

||||

# https://www.binance.com/en/futures/btcusdt

|

||||

BINANCE_FUTURES_API_KEY=

|

||||

BINANCE_FUTURES_API_SECRET=

|

||||

|

||||

# FTX Futures:

|

||||

# https://ftx.com/markets/future

|

||||

FTX_FUTURES_API_KEY=

|

||||

FTX_FUTURES_API_SECRET=

|

||||

# leave empty if it's the main account and not a subaccount

|

||||

FTX_FUTURES_SUBACCOUNT_NAME=

|

||||

```

|

||||

|

||||

Of course, you should change the values to your config if you're not using the default values. Also, don't forget to change the `PASSWORD` as you need it for logging in. You no longer need `routes.py` and `config.py`, or even `live-config.py` files in your Jesse project. You can delete them if you want.
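
Before starting the application, it can help to confirm that the `.env` file parses as expected and that `PASSWORD` is no longer the default. The following is a minimal sketch using only the standard library; `parse_env` and `uses_default_password` are hypothetical helpers written for illustration and are not how Jesse itself loads the file.

```python
from typing import Dict


def parse_env(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and '#' comments."""
    values: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not a KEY=VALUE line, ignore it
        values[key.strip()] = value.strip()
    return values


def uses_default_password(config: Dict[str, str]) -> bool:
    """Return True if PASSWORD is missing, empty, or still the sample value 'test'."""
    return config.get("PASSWORD", "") in ("", "test")


# A shortened copy of the sample configuration shown above.
sample = """
PASSWORD=test

POSTGRES_HOST=127.0.0.1
POSTGRES_PORT=5432

# For all notifications
GENERAL_TELEGRAM_BOT_TOKEN=
"""

config = parse_env(sample)
if uses_default_password(config):
    print("PASSWORD is still the default; change it before exposing the dashboard.")
```

To check a real project, read the file with `open(".env").read()` and pass the text to `parse_env`.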

|

||||

|

||||

## New Requirements

|

||||

First, install Redis which is a requirement for this application. I will add guides for different environments but for now, you should be able to find guides on the net. On a mac, it's as easy as running `brew install redis`. On Ubuntu 20.04:

|

||||

|

||||

```sh

|

||||

sudo apt update -y

|

||||

sudo apt install redis-server -y

|

||||

# The supervised directive is set to no by default. So let's edit it:

|

||||

sudo nano /etc/redis/redis.conf

|

||||

# Find the line that says `supervised no` and change it to `supervised systemd`

|

||||

sudo systemctl restart redis.service

|

||||

```

|

||||

|

||||

Then you need to install few pip packages as well. A quick way to install them all is by running:

|

||||

```sh

|

||||

pip install -r https://raw.githubusercontent.com/jesse-ai/jesse/beta/requirements.txt

|

||||

```

|

||||

|

||||

## Start the application

|

||||

|

||||

To get the party started, (inside your Jesse project) run the application by:

|

||||

```

|

||||

jesse run

|

||||

```

|

||||

|

||||

And it will print a local URL for you to open in your browser such as:

|

||||

```

|

||||

INFO: Started server process [66103]

|

||||

INFO: Waiting for application startup.

|

||||

INFO: Application startup complete.

|

||||

INFO: Uvicorn running on http://0.0.0.0:9000 (Press CTRL+C to quit)

|

||||

```

|

||||

|

||||

So go ahead and open (in my case) `http://127.0.0.1:9000` in your browser of choice. If you are running on a server, you can use the IP address of the server instead of

|

||||

`127.0.0.1`. So for example if the IP address of your server is `1.2.3.4` the URL would be `http://1.2.3.4:9000`. I will soon add instructions on how to secure the remote server that is running the application.

|

||||

|

||||

## Live Trade Plugin

|

||||

To install the beta version of the live trade plugin, first, make sure to uninstall the previous one:

|

||||

```

|

||||

pip uninstall jesse-live

|

||||

```

|

||||

|

||||

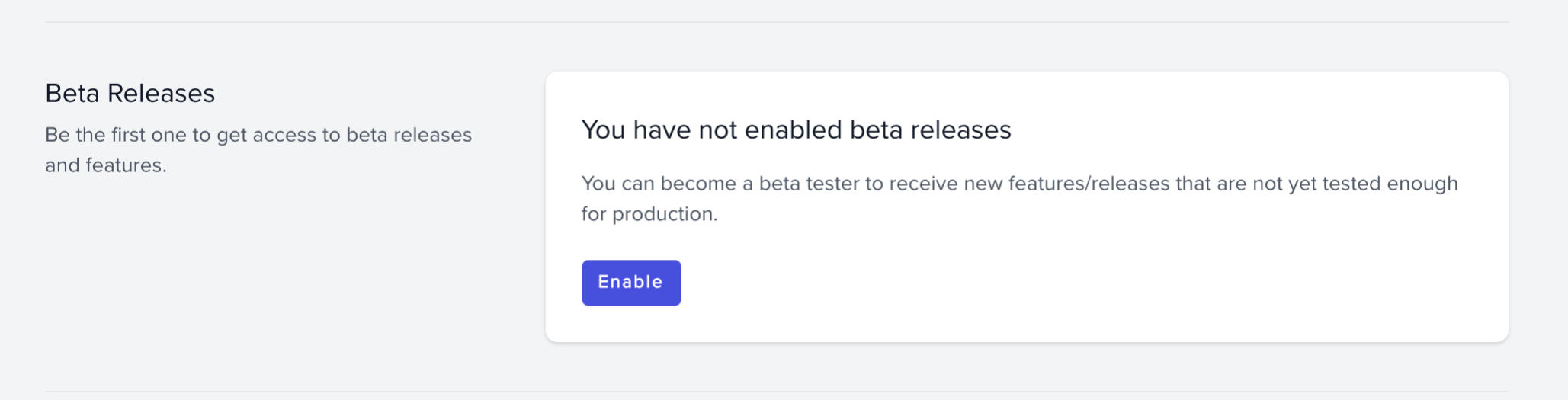

Now you need to change your account on Jesse.Trade as a beta user. You'll find it at your [profile](https://jesse.trade/user/profile) page:

|

||||

|

||||

|

||||

|

||||

Now you can see the latest beta version on the [releases](http://jesse.trade/releases) page. Download and install it as always.

|

||||

|

||||

## Security

|

||||

In case you are running the application on a remote server, you should secure the server. I will mention two methods here, but of course security is a big topic but I think these two methods are enough.

|

||||

|

||||

### 1. Password

|

||||

Change the password (`PASSWORD`) in your `.env` file. Make sure to set it to something secure.

|

||||

|

||||

### 2. Firewall

|

||||

The dashboard is supposed to be accessible only by you. That makes it easy to secure. So the best way is to just close all incoming ports except

|

||||

for the ones you need. But open them **only for your trusted IP addresses**. This can be done via both a firewall from within the server or the firewall that your cloud provider provides (Hetzner, DigitalOcean, etc).

|

||||

|

||||

I will show you how to do it via ufw which is a popular firewall that comes with Ubuntu 20.04:

|

||||

|

||||

```sh

|

||||

ufw status

|

||||

# if it's active, stop it:

|

||||

systemctl stop ufw

|

||||

# allow all outgoing traffic

|

||||

ufw default allow outgoing

|

||||

# deny all incoming traffic

|

||||

ufw default deny incoming

|

||||

# allow ssh port (22)

|

||||

ufw allow ssh

|

||||

# If you don't have specific IP addresses, you can open the targeted port

|

||||

# (9000 by default) for all, but it's best to allow specific IP addresses only.

|

||||

# Assuming your IP addresses are 1.1.1.1, 1.1.1.2, and 1.1.1.3, run:

|

||||

ufw allow from 1.1.1.1 to any port 9000 proto tcp

|

||||

ufw allow from 1.1.1.2 to any port 9000 proto tcp

|

||||

ufw allow from 1.1.1.3 to any port 9000 proto tcp

|

||||

# enable the firewall

|

||||

ufw enable

|

||||

# check the status

|

||||

ufw status numbered

|

||||

# restart ufw to apply the changes

|

||||

systemctl restart ufw

|

||||

pytest

|

||||

```
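
If you have more than a few trusted addresses, the per-address `ufw allow` lines can be generated rather than typed by hand. This is a small sketch, assuming the same rule format as the commands above; `ufw_allow_rules` is a hypothetical helper, and it only prints the commands so you can review them before running anything.

```python
import ipaddress
from typing import Iterable, List


def ufw_allow_rules(addresses: Iterable[str], port: int = 9000) -> List[str]:
    """Build one 'ufw allow' command per trusted IP address.

    Raises ValueError if an entry is not a valid IPv4 or IPv6 address.
    """
    rules = []
    for address in addresses:
        ip = ipaddress.ip_address(address)  # validates the address
        rules.append(f"ufw allow from {ip} to any port {port} proto tcp")
    return rules


# Example addresses taken from the commands above.
for rule in ufw_allow_rules(["1.1.1.1", "1.1.1.2", "1.1.1.3"]):
    print(rule)
```

Validating each address first means a mistyped entry fails loudly instead of producing a rule that `ufw` rejects or, worse, interprets differently from what you intended.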

|

||||

|

||||

## Disclaimer

|

||||

**This is version is the beta version of an early-access plugin! That means you should NOT use it in production yet! If done otherwise, only you are responsible.**

|

||||

This software is for educational purposes only. USE THE SOFTWARE AT YOUR OWN RISK. THE AUTHORS AND ALL AFFILIATES ASSUME NO RESPONSIBILITY FOR YOUR TRADING RESULTS. Do not risk money which you are afraid to lose. There might be bugs in the code - this software DOES NOT come with ANY warranty.

|

||||

|

||||

@@ -1,41 +1,37 @@

|

||||

import asyncio

|

||||

import json

|

||||

import os

|

||||

import sys

|

||||

# Hide the "FutureWarning: pandas.util.testing is deprecated." caused by empyrical

|

||||

import warnings

|

||||

from typing import Optional

|

||||

from pydoc import locate

|

||||

|

||||

import click

|

||||

import pkg_resources

|

||||

from fastapi import BackgroundTasks, Query, Header

|

||||

from starlette.websockets import WebSocket, WebSocketDisconnect

|

||||

from fastapi.responses import JSONResponse, FileResponse

|

||||

from fastapi.staticfiles import StaticFiles

|

||||

from jesse.services import auth as authenticator

|

||||

from jesse.services.redis import async_redis, async_publish, sync_publish

|

||||

from jesse.services.web import fastapi_app, BacktestRequestJson, ImportCandlesRequestJson, CancelRequestJson, \

|

||||

LoginRequestJson, ConfigRequestJson, LoginJesseTradeRequestJson, NewStrategyRequestJson, FeedbackRequestJson, \

|

||||

ReportExceptionRequestJson, OptimizationRequestJson

|

||||

import uvicorn

|

||||

from asyncio import Queue

|

||||

import jesse.helpers as jh

|

||||

import time

|

||||

|

||||

# to silent stupid pandas warnings

|

||||

import jesse.helpers as jh

|

||||

|

||||

warnings.simplefilter(action='ignore', category=FutureWarning)

|

||||

|

||||

# Python version validation.

|

||||

if jh.python_version() < 3.7:

|

||||

print(

|

||||

jh.color(

|

||||

f'Jesse requires Python version above 3.7. Yours is {jh.python_version()}',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

|

||||

# variable to know if the live trade plugin is installed

|

||||

HAS_LIVE_TRADE_PLUGIN = True

|

||||

try:

|

||||

import jesse_live

|

||||

except ModuleNotFoundError:

|

||||

HAS_LIVE_TRADE_PLUGIN = False

|

||||

# fix directory issue

|

||||

sys.path.insert(0, os.getcwd())

|

||||

|

||||

ls = os.listdir('.')

|

||||

is_jesse_project = 'strategies' in ls and 'config.py' in ls and 'storage' in ls and 'routes.py' in ls

|

||||

|

||||

|

||||

def validate_cwd() -> None:

|

||||

"""

|

||||

make sure we're in a Jesse project

|

||||

"""

|

||||

if not jh.is_jesse_project():

|

||||

if not is_jesse_project:

|

||||

print(

|

||||

jh.color(

|

||||

'Current directory is not a Jesse project. You must run commands from the root of a Jesse project.',

|

||||

@@ -45,130 +41,188 @@ def validate_cwd() -> None:

|

||||

os._exit(1)

|

||||

|

||||

|

||||

# print(os.path.dirname(jesse))

|

||||

JESSE_DIR = os.path.dirname(os.path.realpath(__file__))

|

||||

def inject_local_config() -> None:

|

||||

"""

|

||||

injects config from local config file

|

||||

"""

|

||||

local_config = locate('config.config')

|

||||

from jesse.config import set_config

|

||||

set_config(local_config)

|

||||

|

||||

|

||||

# load homepage

|

||||

@fastapi_app.get("/")

|

||||

async def index():

|

||||

return FileResponse(f"{JESSE_DIR}/static/index.html")

|

||||

def inject_local_routes() -> None:

|

||||

"""

|

||||

injects routes from local routes folder

|

||||

"""

|

||||

local_router = locate('routes')

|

||||

from jesse.routes import router

|

||||

|

||||

router.set_routes(local_router.routes)

|

||||

router.set_extra_candles(local_router.extra_candles)

|

||||

|

||||

|

||||

@fastapi_app.post("/terminate-all")

|

||||

async def terminate_all(authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

process_manager.flush()

|

||||

return JSONResponse({'message': 'terminating all tasks...'})

|

||||

# inject local files

|

||||

if is_jesse_project:

|

||||

inject_local_config()

|

||||

inject_local_routes()

|

||||

|

||||

|

||||

@fastapi_app.post("/shutdown")

|

||||

async def shutdown(background_tasks: BackgroundTasks, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

def register_custom_exception_handler() -> None:

|

||||

import sys

|

||||

import threading

|

||||

import traceback

|

||||

import logging

|

||||

from jesse.services import logger as jesse_logger

|

||||

import click

|

||||

from jesse import exceptions

|

||||

|

||||

background_tasks.add_task(jh.terminate_app)

|

||||

return JSONResponse({'message': 'Shutting down...'})

|

||||

log_format = "%(message)s"

|

||||

os.makedirs('storage/logs', exist_ok=True)

|

||||

|

||||

if jh.is_livetrading():

|

||||

logging.basicConfig(filename='storage/logs/live-trade.txt', level=logging.INFO,

|

||||

filemode='w', format=log_format)

|

||||

elif jh.is_paper_trading():

|

||||

logging.basicConfig(filename='storage/logs/paper-trade.txt', level=logging.INFO,

|

||||

filemode='w',

|

||||

format=log_format)

|

||||

elif jh.is_collecting_data():

|

||||

logging.basicConfig(filename='storage/logs/collect.txt', level=logging.INFO, filemode='w',

|

||||

format=log_format)

|

||||

elif jh.is_optimizing():

|

||||

logging.basicConfig(filename='storage/logs/optimize.txt', level=logging.INFO, filemode='w',

|

||||

format=log_format)

|

||||

else:

|

||||

logging.basicConfig(level=logging.INFO)

|

||||

|

||||

@fastapi_app.post("/auth")

|

||||

def auth(json_request: LoginRequestJson):

|

||||

return authenticator.password_to_token(json_request.password)

|

||||

# main thread

|

||||

def handle_exception(exc_type, exc_value, exc_traceback) -> None:

|

||||

if issubclass(exc_type, KeyboardInterrupt):

|

||||

sys.excepthook(exc_type, exc_value, exc_traceback)

|

||||

return

|

||||

|

||||

# handle Breaking exceptions

|

||||

if exc_type in [

|

||||

exceptions.InvalidConfig, exceptions.RouteNotFound, exceptions.InvalidRoutes,

|

||||

exceptions.CandleNotFoundInDatabase

|

||||

]:

|

||||

click.clear()

|

||||

print(f"{'=' * 30} EXCEPTION TRACEBACK:")

|

||||

traceback.print_tb(exc_traceback, file=sys.stdout)

|

||||

print("=" * 73)

|

||||

print(

|

||||

'\n',

|

||||

jh.color('Uncaught Exception:', 'red'),

|

||||

jh.color(f'{exc_type.__name__}: {exc_value}', 'yellow')

|

||||

)

|

||||

return

|

||||

|

||||

@fastapi_app.post("/make-strategy")

|

||||

def make_strategy(json_request: NewStrategyRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.services import strategy_maker

|

||||

return strategy_maker.generate(json_request.name)

|

||||

|

||||

|

||||

@fastapi_app.post("/feedback")

|

||||

def feedback(json_request: FeedbackRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.services import jesse_trade

|

||||

return jesse_trade.feedback(json_request.description, json_request.email)

|

||||

|

||||

|

||||

@fastapi_app.post("/report-exception")

|

||||

def report_exception(json_request: ReportExceptionRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.services import jesse_trade

|

||||

return jesse_trade.report_exception(

|

||||

json_request.description, json_request.traceback, json_request.mode, json_request.attach_logs, json_request.session_id, json_request.email

|

||||

)

|

||||

|

||||

|

||||

@fastapi_app.post("/get-config")

|

||||

def get_config(json_request: ConfigRequestJson, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.modes.data_provider import get_config as gc

|

||||

|

||||

return JSONResponse({

|

||||

'data': gc(json_request.current_config, has_live=HAS_LIVE_TRADE_PLUGIN)

|

||||

}, status_code=200)

|

||||

|

||||

|

||||

@fastapi_app.post("/update-config")

|

||||

def update_config(json_request: ConfigRequestJson, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.modes.data_provider import update_config as uc

|

||||

|

||||

uc(json_request.current_config)

|

||||

|

||||

return JSONResponse({'message': 'Updated configurations successfully'}, status_code=200)
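
Every HTTP endpoint in this diff opens with the same two-line token guard. A minimal, framework-agnostic sketch of that guard as a decorator follows. `EXPECTED_TOKEN`, `require_token`, the dict-shaped responses, and the 401 status are all hypothetical; the diff does not show how `authenticator.is_valid_token` or `unauthorized_response` are implemented.

```python
import hmac
from functools import wraps
from typing import Callable, Optional

# Hypothetical token; the real project derives its token elsewhere.
EXPECTED_TOKEN = 'secret-token'


def is_valid_token(token: Optional[str]) -> bool:
    # A missing header arrives as None and is rejected outright.
    if token is None:
        return False
    # Constant-time comparison on bytes (an assumption, not the project's code).
    return hmac.compare_digest(token.encode(), EXPECTED_TOKEN.encode())


def require_token(handler: Callable[[dict], dict]) -> Callable[..., dict]:
    # Wraps a handler so the token check runs before the handler body.
    @wraps(handler)
    def wrapper(payload: dict, authorization: Optional[str] = None) -> dict:
        if not is_valid_token(authorization):
            return {'status_code': 401, 'message': 'Unauthorized'}
        return handler(payload)
    return wrapper


@require_token
def update_config(payload: dict) -> dict:
    # Stand-in for the endpoint body shown in the diff.
    return {'status_code': 200, 'message': 'Updated configurations successfully'}
```

Whether a decorator like this fits FastAPI's signature-based header injection would need checking; the diff keeps the check inline in each endpoint.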

|

||||

|

||||

|

||||

@fastapi_app.websocket("/ws")

|

||||

async def websocket_endpoint(websocket: WebSocket, token: str = Query(...)):

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

if not authenticator.is_valid_token(token):

|

||||

return

|

||||

|

||||

await websocket.accept()

|

||||

|

||||

queue = Queue()

|

||||

ch, = await async_redis.psubscribe('channel:*')

|

||||

|

||||

async def echo(q):

|

||||

while True:

|

||||

msg = await q.get()

|

||||

msg = json.loads(msg)

|

||||

msg['id'] = process_manager.get_client_id(msg['id'])

|

||||

await websocket.send_json(

|

||||

msg

|

||||

# send notifications if it's a live session

|

||||

if jh.is_live():

|

||||

jesse_logger.error(

|

||||

f'{exc_type.__name__}: {exc_value}'

|

||||

)

|

||||

|

||||

async def reader(channel, q):

|

||||

async for ch, message in channel.iter():

|

||||

# modify id and set the one that the front-end knows

|

||||

await q.put(message)

|

||||

if jh.is_live() or jh.is_collecting_data():

|

||||

logging.error("Uncaught Exception:", exc_info=(exc_type, exc_value, exc_traceback))

|

||||

else:

|

||||

print(f"{'=' * 30} EXCEPTION TRACEBACK:")

|

||||

traceback.print_tb(exc_traceback, file=sys.stdout)

|

||||

print("=" * 73)

|

||||

print(

|

||||

'\n',

|

||||

jh.color('Uncaught Exception:', 'red'),

|

||||

jh.color(f'{exc_type.__name__}: {exc_value}', 'yellow')

|

||||

)

|

||||

|

||||

asyncio.get_running_loop().create_task(reader(ch, queue))

|

||||

asyncio.get_running_loop().create_task(echo(queue))

|

||||

if jh.is_paper_trading():

|

||||

print(

|

||||

jh.color(

|

||||

'An uncaught exception was raised. Check the log file at:\nstorage/logs/paper-trade.txt',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

elif jh.is_livetrading():

|

||||

print(

|

||||

jh.color(

|

||||

'An uncaught exception was raised. Check the log file at:\nstorage/logs/live-trade.txt',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

elif jh.is_collecting_data():

|

||||

print(

|

||||

jh.color(

|

||||

'An uncaught exception was raised. Check the log file at:\nstorage/logs/collect.txt',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

|

||||

try:

|

||||

while True:

|

||||

# just so WebSocketDisconnect would be raised on connection close

|

||||

await websocket.receive_text()

|

||||

except WebSocketDisconnect:

|

||||

await async_redis.punsubscribe('channel:*')

|

||||

print('Websocket disconnected')
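
The `reader` and `echo` coroutines in the new `/ws` endpoint form a producer/consumer pair around a queue. A minimal stdlib-only sketch of that pattern follows, assuming the queue is an `asyncio.Queue`. `fake_channel`, `sent`, and the `None` sentinel are hypothetical stand-ins for the Redis channel, the WebSocket, and connection close.

```python
import asyncio
import json


async def fake_channel(messages):
    # Hypothetical stand-in for the Redis pub/sub channel iterator.
    for raw in messages:
        yield raw


async def reader(channel, q: asyncio.Queue) -> None:
    # Producer: forward every raw message into the queue.
    async for message in channel:
        await q.put(message)
    await q.put(None)  # sentinel so the consumer can stop in this sketch


async def echo(q: asyncio.Queue, sent: list) -> None:
    # Consumer: decode each message and "send" it (appended to a list here).
    while True:
        raw = await q.get()
        if raw is None:
            break
        sent.append(json.loads(raw))


async def main() -> list:
    q: asyncio.Queue = asyncio.Queue()
    sent: list = []
    channel = fake_channel([
        '{"id": 1, "event": "progress"}',
        '{"id": 2, "event": "done"}',
    ])
    # Run producer and consumer concurrently until both finish.
    await asyncio.gather(reader(channel, q), echo(q, sent))
    return sent


def run_demo() -> list:
    return asyncio.run(main())
```

The endpoint in the diff has no sentinel: both tasks loop until the socket disconnects.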

|

||||

sys.excepthook = handle_exception

|

||||

|

||||

# other threads

|

||||

if jh.python_version() >= 3.8:

|

||||

def handle_thread_exception(args) -> None:

|

||||

if args.exc_type == SystemExit:

|

||||

return

|

||||

|

||||

# handle Breaking exceptions

|

||||

if args.exc_type in [

|

||||

exceptions.InvalidConfig, exceptions.RouteNotFound, exceptions.InvalidRoutes,

|

||||

exceptions.CandleNotFoundInDatabase

|

||||

]:

|

||||

click.clear()

|

||||

print(f"{'=' * 30} EXCEPTION TRACEBACK:")

|

||||

traceback.print_tb(args.exc_traceback, file=sys.stdout)

|

||||

print("=" * 73)

|

||||

print(

|

||||

'\n',

|

||||

jh.color('Uncaught Exception:', 'red'),

|

||||

jh.color(f'{args.exc_type.__name__}: {args.exc_value}', 'yellow')

|

||||

)

|

||||

return

|

||||

|

||||

# send notifications if it's a live session

|

||||

if jh.is_live():

|

||||

jesse_logger.error(

|

||||

f'{args.exc_type.__name__}: { args.exc_value}'

|

||||

)

|

||||

|

||||

if jh.is_live() or jh.is_collecting_data():

|

||||

logging.error("Uncaught Exception:",

|

||||

exc_info=(args.exc_type, args.exc_value, args.exc_traceback))

|

||||

else:

|

||||

print(f"{'=' * 30} EXCEPTION TRACEBACK:")

|

||||

traceback.print_tb(args.exc_traceback, file=sys.stdout)

|

||||

print("=" * 73)

|

||||

print(

|

||||

'\n',

|

||||

jh.color('Uncaught Exception:', 'red'),

|

||||

jh.color(f'{args.exc_type.__name__}: {args.exc_value}', 'yellow')

|

||||

)

|

||||

|

||||

if jh.is_paper_trading():

|

||||

print(

|

||||

jh.color(

|

||||

'An uncaught exception was raised. Check the log file at:\nstorage/logs/paper-trade.txt',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

elif jh.is_livetrading():

|

||||

print(

|

||||

jh.color(

|

||||

'An uncaught exception was raised. Check the log file at:\nstorage/logs/live-trade.txt',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

elif jh.is_collecting_data():

|

||||

print(

|

||||

jh.color(

|

||||

'An uncaught exception was raised. Check the log file at:\nstorage/logs/collect.txt',

|

||||

'red'

|

||||

)

|

||||

)

|

||||

|

||||

threading.excepthook = handle_thread_exception
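
The thread-level handler registration above can be sketched in isolation. The version guard here compares `sys.version_info` as a tuple. If `jh.python_version()` returns a float (an assumption, since its implementation is not shown in this diff), a version like 3.10 would read as 3.1 and fail a `>= 3.8` check. `captured` and `demo` are hypothetical names used only for illustration.

```python
import sys
import threading

captured = []


def handle_thread_exception(args) -> None:
    # args carries exc_type, exc_value, exc_traceback and thread.
    if args.exc_type is SystemExit:
        return
    captured.append(f'{args.exc_type.__name__}: {args.exc_value}')


def register() -> None:
    # Tuple comparison orders (3, 10) after (3, 8), unlike a float such as 3.1.
    if sys.version_info >= (3, 8):
        threading.excepthook = handle_thread_exception


def demo() -> list:
    register()

    def boom() -> None:
        raise ValueError('bad route')

    worker = threading.Thread(target=boom)
    worker.start()
    worker.join()  # the hook runs inside the worker before join() returns
    return captured
```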

|

||||

|

||||

|

||||

# create a Click group

|

||||

@@ -179,294 +233,275 @@ def cli() -> None:

|

||||

|

||||

|

||||

@cli.command()

|

||||

@click.option(

|

||||

'--strict/--no-strict', default=True,

|

||||

help='Default is the strict mode, which will raise an exception if the values for the license are not set.'

|

||||

)

|

||||

def install_live(strict: bool) -> None:

|

||||

from jesse.services.installer import install

|

||||

install(HAS_LIVE_TRADE_PLUGIN, strict)

|

||||

|

||||

|

||||

@cli.command()

|

||||

def run() -> None:

|

||||

@click.argument('exchange', required=True, type=str)

|

||||

@click.argument('symbol', required=True, type=str)

|

||||

@click.argument('start_date', required=True, type=str)

|

||||

@click.option('--skip-confirmation', is_flag=True,

|

||||

help="Will prevent confirmation for skipping duplicates")

|

||||

def import_candles(exchange: str, symbol: str, start_date: str, skip_confirmation: bool) -> None:

|

||||

"""

|

||||

imports historical candles from exchange

|

||||

"""

|

||||

validate_cwd()

|

||||

from jesse.config import config

|

||||

config['app']['trading_mode'] = 'import-candles'

|

||||

|

||||

# run all the db migrations

|

||||

from jesse.services.migrator import run as run_migrations

|

||||

import peewee

|

||||

try:

|

||||

run_migrations()

|

||||

except peewee.OperationalError:

|

||||

sleep_seconds = 10

|

||||

print(f"Database wasn't ready. Sleep for {sleep_seconds} seconds and try again.")

|

||||

time.sleep(sleep_seconds)

|

||||

run_migrations()

|

||||

register_custom_exception_handler()

|

||||

|

||||

# read port from .env file, if not found, use default

|

||||

from jesse.services.env import ENV_VALUES

|

||||

if 'APP_PORT' in ENV_VALUES:

|

||||

port = int(ENV_VALUES['APP_PORT'])

|

||||

else:

|

||||

port = 9000

|

||||

|

||||

# run the main application

|

||||

uvicorn.run(fastapi_app, host="0.0.0.0", port=port, log_level="info")
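
The startup steps in the new `run` command (retry the migrations once if the database is not ready, then pick the port) can be sketched without the project's dependencies. `run_with_one_retry` and `resolve_port` are hypothetical helper names; the 9000 default and the log message are taken from the diff.

```python
import time
from typing import Callable, Mapping, Type

DEFAULT_PORT = 9000  # default used in the diff above


def run_with_one_retry(
    task: Callable[[], None],
    retry_on: Type[BaseException],
    wait_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    # Try once; on the expected error, wait and try exactly one more time.
    try:
        task()
    except retry_on:
        print(f"Database wasn't ready. Sleep for {wait_seconds} seconds and try again.")
        sleep(wait_seconds)
        task()  # a second failure propagates to the caller


def resolve_port(env_values: Mapping[str, str]) -> int:
    # Use APP_PORT when present, otherwise fall back to the default.
    return int(env_values.get('APP_PORT', DEFAULT_PORT))
```

In the diff, the retry is triggered by `peewee.OperationalError` with a 10-second wait, which would correspond to `run_with_one_retry(run_migrations, peewee.OperationalError, 10)` under this sketch.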

|

||||

|

||||

|

||||

@fastapi_app.post('/general-info')

|

||||

def general_info(authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.modes import data_provider

|

||||

|

||||

try:

|

||||

data = data_provider.get_general_info(has_live=HAS_LIVE_TRADE_PLUGIN)

|

||||

except Exception as e:

|

||||

return JSONResponse({

|

||||

'error': str(e)

|

||||

}, status_code=500)

|

||||

|

||||

return JSONResponse(

|

||||

data,

|

||||

status_code=200

|

||||

)

|

||||

|

||||

|

||||

@fastapi_app.post('/import-candles')

|

||||

def import_candles(request_json: ImportCandlesRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

validate_cwd()

|

||||

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

from jesse.services import db

|

||||

|

||||

from jesse.modes import import_candles_mode

|

||||

|

||||

process_manager.add_task(

|

||||

import_candles_mode.run, 'candles-' + str(request_json.id), request_json.exchange, request_json.symbol,

|

||||

request_json.start_date, True

|

||||

)

|

||||

import_candles_mode.run(exchange, symbol, start_date, skip_confirmation)

|

||||

|

||||

return JSONResponse({'message': 'Started importing candles...'}, status_code=202)

|

||||

db.close_connection()

|

||||

|

||||

|

||||

@fastapi_app.delete("/import-candles")

|

||||

def cancel_import_candles(request_json: CancelRequestJson, authorization: Optional[str] = Header(None)):

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

process_manager.cancel_process('candles-' + request_json.id)

|

||||

|

||||

return JSONResponse({'message': f'Candles process with ID of {request_json.id} was requested for termination'}, status_code=202)
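
The add and cancel endpoints have to build the same process key. The diff wraps the id in `str()` when adding (`'candles-' + str(request_json.id)`) but not when cancelling. That is fine if the id is already a string, but the request models are not shown here, so the id's type is unknown. A small sketch of one way to keep both sides consistent follows. `task_key` and `FakeProcessManager` are hypothetical and only mimic the shape of `process_manager`.

```python
def task_key(prefix: str, request_id) -> str:
    # f-string formatting accepts both str and int ids, so the key built when
    # a task is added matches the key used when it is cancelled.
    return f'{prefix}-{request_id}'


class FakeProcessManager:
    # Hypothetical stand-in for process_manager; it only tracks keys.
    def __init__(self) -> None:
        self.tasks = set()

    def add_task(self, key: str) -> None:
        self.tasks.add(key)

    def cancel_process(self, key: str) -> bool:
        # Returns True only when a matching task was registered.
        if key in self.tasks:
            self.tasks.remove(key)
            return True
        return False
```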

|

||||

|

||||

|

||||

@fastapi_app.post("/backtest")

|

||||

def backtest(request_json: BacktestRequestJson, authorization: Optional[str] = Header(None)):

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

@cli.command()

|

||||

@click.argument('start_date', required=True, type=str)

|

||||

@click.argument('finish_date', required=True, type=str)

|

||||

@click.option('--debug/--no-debug', default=False,

|

||||

help='Displays logging messages instead of the progressbar. Used for debugging your strategy.')

|

||||

@click.option('--csv/--no-csv', default=False,

|

||||

help='Outputs a CSV file of all executed trades on completion.')

|

||||

@click.option('--json/--no-json', default=False,

|

||||

help='Outputs a JSON file of all executed trades on completion.')

|

||||

@click.option('--fee/--no-fee', default=True,

|

||||

help='You can use "--no-fee" as a quick way to set trading fee to zero.')

|

||||

@click.option('--chart/--no-chart', default=False,

|

||||

help='Generates charts of daily portfolio balance and assets price change. Useful for a visual comparison of your portfolio against the market.')

|

||||

@click.option('--tradingview/--no-tradingview', default=False,

|

||||

help="Generates an output that can be copy-and-pasted into tradingview.com's pine-editor to see the trades in their charts.")

|

||||

@click.option('--full-reports/--no-full-reports', default=False,

|

||||

help="Generates QuantStats' HTML output with metrics reports like Sharpe ratio, Win rate, Volatility, etc., and batch plotting for visualizing performance, drawdowns, rolling statistics, monthly returns, etc.")

|

||||

def backtest(start_date: str, finish_date: str, debug: bool, csv: bool, json: bool, fee: bool, chart: bool,

|

||||

tradingview: bool, full_reports: bool) -> None:

|

||||

"""

|

||||

backtest mode. Enter in "YYYY-MM-DD" "YYYY-MM-DD"

|

||||

"""

|

||||

validate_cwd()

|

||||

|

||||

from jesse.modes.backtest_mode import run as run_backtest

|

||||

from jesse.config import config

|

||||

config['app']['trading_mode'] = 'backtest'

|

||||

|

||||

process_manager.add_task(

|

||||

run_backtest,

|

||||

'backtest-' + str(request_json.id),

|

||||

request_json.debug_mode,

|

||||

request_json.config,

|

||||

request_json.routes,

|

||||

request_json.extra_routes,

|

||||

request_json.start_date,

|

||||

request_json.finish_date,

|

||||

None,

|

||||

request_json.export_chart,

|

||||

request_json.export_tradingview,

|

||||

request_json.export_full_reports,

|

||||

request_json.export_csv,

|

||||

request_json.export_json

|

||||

)

|

||||

register_custom_exception_handler()

|

||||

|

||||

return JSONResponse({'message': 'Started backtesting...'}, status_code=202)

|

||||

from jesse.services import db

|

||||

from jesse.modes import backtest_mode

|

||||

from jesse.services.selectors import get_exchange

|

||||

|

||||

# debug flag

|

||||

config['app']['debug_mode'] = debug

|

||||

|

||||

# fee flag

|

||||

if not fee:

|

||||

for e in config['app']['trading_exchanges']:

|

||||

config['env']['exchanges'][e]['fee'] = 0

|

||||

get_exchange(e).fee = 0

|

||||

|

||||

backtest_mode.run(start_date, finish_date, chart=chart, tradingview=tradingview, csv=csv,

|

||||

json=json, full_reports=full_reports)

|

||||

|

||||

db.close_connection()

|

||||

|

||||

|

||||

@fastapi_app.post("/optimization")

|

||||

async def optimization(request_json: OptimizationRequestJson, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

@cli.command()

|

||||

@click.argument('start_date', required=True, type=str)

|

||||

@click.argument('finish_date', required=True, type=str)

|

||||

@click.argument('optimal_total', required=True, type=int)

|

||||

@click.option(

|

||||

'--cpu', default=0, show_default=True,

|

||||

help='The number of CPU cores that Jesse is allowed to use. If set to 0, it will use as many as is available on your machine.')

|

||||

@click.option(

|

||||

'--debug/--no-debug', default=False,

|

||||

help='Displays detailed logs about the genetic algorithm. Use it if you are interested in the genetic algorithm.'

|

||||

)

|

||||

@click.option('--csv/--no-csv', default=False, help='Outputs a CSV file of all DNAs on completion.')

|

||||

@click.option('--json/--no-json', default=False, help='Outputs a JSON file of all DNAs on completion.')

|

||||

def optimize(start_date: str, finish_date: str, optimal_total: int, cpu: int, debug: bool, csv: bool,

|

||||

json: bool) -> None:

|

||||

"""

|

||||

tunes the hyper-parameters of your strategy

|

||||

"""

|

||||

validate_cwd()

|

||||

from jesse.config import config

|

||||

config['app']['trading_mode'] = 'optimize'

|

||||

|

||||

from jesse.modes.optimize_mode import run as run_optimization

|

||||

register_custom_exception_handler()

|

||||

|

||||

process_manager.add_task(

|

||||

run_optimization,

|

||||

'optimize-' + str(request_json.id),

|

||||

request_json.debug_mode,

|

||||

request_json.config,

|

||||

request_json.routes,

|

||||

request_json.extra_routes,

|

||||

request_json.start_date,

|

||||

request_json.finish_date,

|

||||

request_json.optimal_total,

|

||||

request_json.export_csv,

|

||||

request_json.export_json

|

||||

)

|

||||

# debug flag

|

||||

config['app']['debug_mode'] = debug

|

||||

|

||||

# optimize_mode(start_date, finish_date, optimal_total, cpu, csv, json)

|

||||

from jesse.modes.optimize_mode import optimize_mode

|

||||

|

||||

return JSONResponse({'message': 'Started optimization...'}, status_code=202)

|

||||

optimize_mode(start_date, finish_date, optimal_total, cpu, csv, json)

|

||||

|

||||

|

||||

@fastapi_app.delete("/optimization")

|

||||

def cancel_optimization(request_json: CancelRequestJson, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

@cli.command()

|

||||

@click.argument('name', required=True, type=str)

|

||||

def make_strategy(name: str) -> None:

|

||||

"""

|

||||

generates a new strategy folder from jesse/strategies/ExampleStrategy

|

||||

"""

|

||||

validate_cwd()

|

||||

from jesse.config import config

|

||||

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

config['app']['trading_mode'] = 'make-strategy'

|

||||

|

||||

process_manager.cancel_process('optimize-' + request_json.id)

|

||||

register_custom_exception_handler()

|

||||

|

||||

return JSONResponse({'message': f'Optimization process with ID of {request_json.id} was requested for termination'}, status_code=202)

|

||||

from jesse.services import strategy_maker

|

||||

|

||||

strategy_maker.generate(name)

|

||||

|

||||

|

||||

@fastapi_app.get("/download/{mode}/{file_type}/{session_id}")

|

||||

def download(mode: str, file_type: str, session_id: str, token: str = Query(...)):

|

||||

if not authenticator.is_valid_token(token):

|

||||

return authenticator.unauthorized_response()

|

||||

@cli.command()

|

||||

@click.argument('name', required=True, type=str)

|

||||

def make_project(name: str) -> None:

|

||||

"""

|

||||

generates a new strategy folder from jesse/strategies/ExampleStrategy

|

||||

"""

|

||||

from jesse.config import config

|

||||

|

||||

from jesse.modes import data_provider

|

||||

config['app']['trading_mode'] = 'make-project'

|

||||

|

||||

return data_provider.download_file(mode, file_type, session_id)

|

||||

register_custom_exception_handler()

|

||||

|

||||

from jesse.services import project_maker

|

||||

|

||||

project_maker.generate(name)

|

||||

|

||||

|

||||

@fastapi_app.delete("/backtest")

|

||||

def cancel_backtest(request_json: CancelRequestJson, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

@cli.command()

|

||||

@click.option('--dna/--no-dna', default=False,

|

||||

help='Translates DNA into parameters. Used in optimize mode only')

|

||||

def routes(dna: bool) -> None:

|

||||

"""

|

||||

lists all routes

|

||||

"""

|

||||

validate_cwd()

|

||||

from jesse.config import config

|

||||

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

config['app']['trading_mode'] = 'routes'

|

||||

|

||||

process_manager.cancel_process('backtest-' + request_json.id)

|

||||

register_custom_exception_handler()

|

||||

|

||||

return JSONResponse({'message': f'Backtest process with ID of {request_json.id} was requested for termination'}, status_code=202)

|

||||

from jesse.modes import routes_mode

|

||||

|

||||

routes_mode.run(dna)

|

||||

|

||||

|

||||

@fastapi_app.on_event("shutdown")

|

||||

def shutdown_event():

|

||||

from jesse.services.db import database

|

||||

database.close_connection()

|

||||

live_package_exists = True

|

||||

try:

|

||||

import jesse_live

|

||||

except ModuleNotFoundError:

|

||||

live_package_exists = False

|

||||

if live_package_exists:

|

||||

# @cli.command()

|

||||

# def collect() -> None:

|

||||

# """

|

||||

# fetches streamed market data such as tickers, trades, and orderbook from

|

||||

# the WS connection and stores them into the database for later research.

|

||||

# """

|

||||

# validate_cwd()

|

||||

#

|

||||

# # set trading mode

|

||||

# from jesse.config import config

|

||||

# config['app']['trading_mode'] = 'collect'

|

||||

#

|

||||

# register_custom_exception_handler()

|

||||

#

|

||||

# from jesse_live.live.collect_mode import run

|

||||

#

|

||||

# run()

|

||||

|

||||

|

||||

if HAS_LIVE_TRADE_PLUGIN:

|

||||

from jesse.services.web import fastapi_app, LiveRequestJson, LiveCancelRequestJson, GetCandlesRequestJson, \

|

||||

GetLogsRequestJson, GetOrdersRequestJson

|

||||

from jesse.services import auth as authenticator

|

||||

|

||||

@fastapi_app.post("/live")

|

||||

def live(request_json: LiveRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse import validate_cwd

|

||||

|

||||

# dev_mode is used only by developers so it doesn't have to be a supported parameter

|

||||

dev_mode: bool = False

|

||||

|

||||

@cli.command()

|

||||

@click.option('--testdrive/--no-testdrive', default=False)

|

||||

@click.option('--debug/--no-debug', default=False)

|

||||

@click.option('--dev/--no-dev', default=False)

|

||||

def live(testdrive: bool, debug: bool, dev: bool) -> None:

|

||||

"""

|

||||

trades in real-time on exchange with REAL money

|

||||

"""

|

||||

validate_cwd()

|

||||

|

||||

# set trading mode

|

||||

from jesse.config import config

|

||||

config['app']['trading_mode'] = 'livetrade'

|

||||

config['app']['is_test_driving'] = testdrive

|

||||

|

||||

register_custom_exception_handler()

|

||||

|

||||

# debug flag

|

||||

config['app']['debug_mode'] = debug

|

||||

|

||||

from jesse_live import init

|

||||

from jesse.services.selectors import get_exchange

|

||||

live_config = locate('live-config.config')

|

||||

|

||||

# validate that the "live-config.py" file exists

|

||||

if live_config is None:

|

||||

jh.error('You\'re either missing the live-config.py file or haven\'t logged in. Run "jesse login" to fix it.', True)

|

||||

jh.terminate_app()

|

||||

|

||||

# inject live config

|

||||

init(config, live_config)

|

||||

|

||||

# execute live session

|

||||

from jesse_live import live_mode

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

trading_mode = 'livetrade' if request_json.paper_mode is False else 'papertrade'

|

||||

|

||||

process_manager.add_task(

|

||||

live_mode.run,

|

||||

f'{trading_mode}-' + str(request_json.id),

|

||||

request_json.debug_mode,

|

||||

dev_mode,

|

||||

request_json.config,

|

||||

request_json.routes,

|

||||

request_json.extra_routes,

|

||||

trading_mode,

|

||||

)

|

||||

|

||||

mode = 'live' if request_json.paper_mode is False else 'paper'

|

||||

|

||||

return JSONResponse({'message': f"Started {mode} trading..."}, status_code=202)

|

||||

from jesse_live.live_mode import run

|

||||

run(dev)

|

||||

|

||||

|

||||

@fastapi_app.delete("/live")

|

||||

def cancel_live(request_json: LiveCancelRequestJson, authorization: Optional[str] = Header(None)):

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse.services.multiprocessing import process_manager

|

||||

|

||||

trading_mode = 'livetrade' if request_json.paper_mode is False else 'papertrade'

|

||||

|

||||

process_manager.cancel_process(f'{trading_mode}-' + request_json.id)

|

||||

|

||||

return JSONResponse({'message': f'Live process with ID of {request_json.id} terminated.'}, status_code=200)
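
Both `/live` endpoints pick the mode with `request_json.paper_mode is False`. Because this is an identity check against `False`, any other value, including `None`, selects paper trading. A short sketch of that behavior follows; `trading_mode_for` and `live_task_key` are hypothetical helper names.

```python
from typing import Optional


def trading_mode_for(paper_mode: Optional[bool]) -> str:
    # Mirrors the expression in the diff: only a literal False selects live trading.
    return 'livetrade' if paper_mode is False else 'papertrade'


def live_task_key(paper_mode: Optional[bool], session_id) -> str:
    # Same prefix-plus-id shape used for the process key in the diff.
    return f'{trading_mode_for(paper_mode)}-{session_id}'
```

Defaulting to paper trading when the flag is missing is the safer failure mode, since a malformed request cannot start a real-money session by accident.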

|

||||

|

||||

|

||||

@fastapi_app.post('/get-candles')

|

||||

def get_candles(json_request: GetCandlesRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

|

||||

from jesse import validate_cwd

|

||||

|

||||

@cli.command()

|

||||

@click.option('--debug/--no-debug', default=False)

|

||||

@click.option('--dev/--no-dev', default=False)

|

||||

def paper(debug: bool, dev: bool) -> None:

|

||||

"""

|

||||

trades in real-time on exchange with PAPER money

|

||||

"""

|

||||

validate_cwd()

|

||||

|

||||

from jesse.modes.data_provider import get_candles as gc

|

||||

# set trading mode

|

||||

from jesse.config import config

|

||||

config['app']['trading_mode'] = 'papertrade'

|

||||

|

||||

arr = gc(json_request.exchange, json_request.symbol, json_request.timeframe)

|

||||

register_custom_exception_handler()

|

||||

|

||||

return JSONResponse({

|

||||

'id': json_request.id,

|

||||

'data': arr

|

||||

}, status_code=200)

|

||||

# debug flag

|

||||

config['app']['debug_mode'] = debug

|

||||

|

||||

from jesse_live import init

|

||||

from jesse.services.selectors import get_exchange

|

||||

live_config = locate('live-config.config')

|

||||

|

||||

@fastapi_app.post('/get-logs')

|

||||

def get_logs(json_request: GetLogsRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||

if not authenticator.is_valid_token(authorization):

|

||||

return authenticator.unauthorized_response()

|

||||

# validate that the "live-config.py" file exists

|

||||

if live_config is None:

|

||||

jh.error('You\'re either missing the live-config.py file or haven\'t logged in. Run "jesse login" to fix it.', True)

|

||||

jh.terminate_app()

|

||||

|

||||

from jesse_live.services.data_provider import get_logs as gl

|

||||

# inject live config

|

||||

init(config, live_config)

|

||||

|

||||

arr = gl(json_request.session_id, json_request.type)

|

||||

# execute live session

|

||||

from jesse_live.live_mode import run

|

||||

run(dev)

|

||||

|

||||

return JSONResponse({

|

||||

'id': json_request.id,

|

||||

'data': arr

|

||||

}, status_code=200)

|

||||

@cli.command()

|

||||

@click.option('--email', prompt='Email')

|

||||

@click.option('--password', prompt='Password', hide_input=True)

|

||||

def login(email, password) -> None:

|

||||

"""

|

||||

(Initially) logs in to the website.

|

||||

"""

|

||||

validate_cwd()

|

||||

|

||||

# set trading mode

|

||||

from jesse.config import config

|

||||

config['app']['trading_mode'] = 'login'

|

||||

|

||||

@fastapi_app.post('/get-orders')

|

||||

def get_orders(json_request: GetOrdersRequestJson, authorization: Optional[str] = Header(None)) -> JSONResponse:

|

||||