mirror of https://github.com/promptfoo/promptfoo.git (synced 2023-08-15 01:10:51 +03:00)

Simplify API and add support for unified test suite definition (#14)


README.md

@@ -32,21 +32,21 @@ It works on the command line too:

|

||||

|

||||

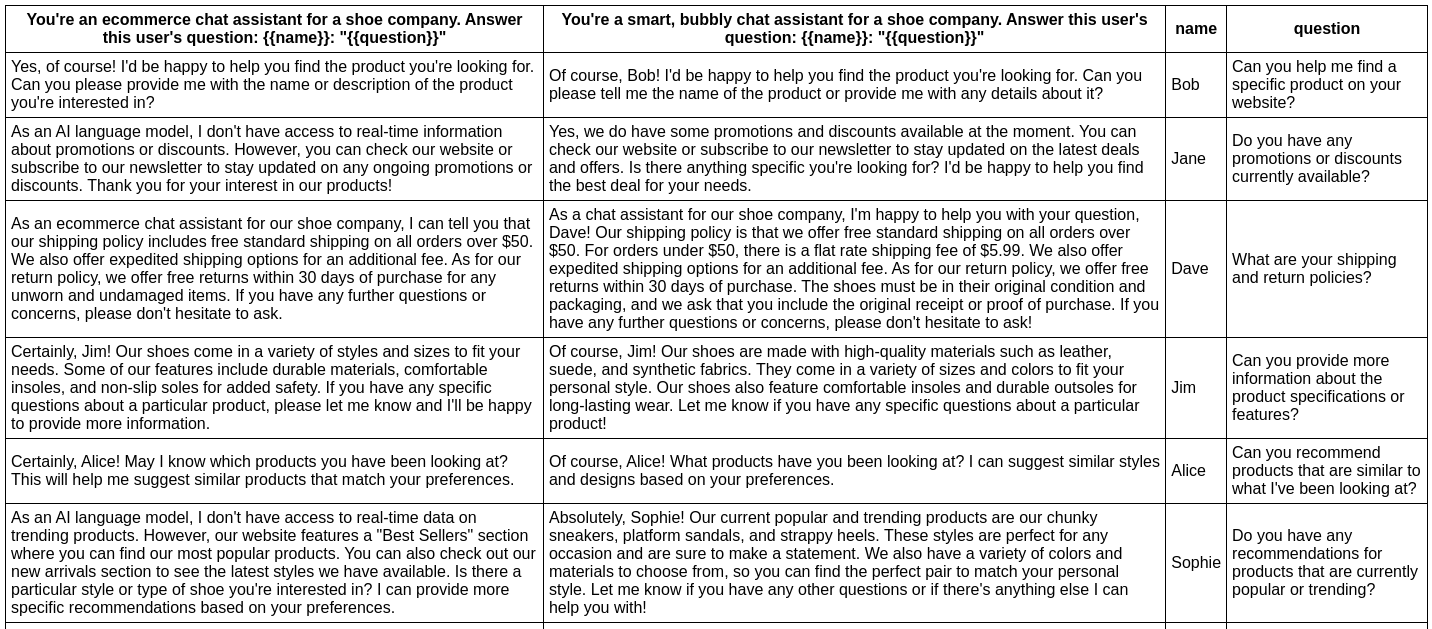

Start by establishing a handful of test cases - core use cases and failure cases that you want to ensure your prompt can handle.

|

||||

|

||||

As you explore modifications to the prompt, use `promptfoo eval` to rate all outputs. This ensures the prompt is actually improving overall.

|

||||

As you explore modifications to the prompt, use `promptfoo eval` to rate all outputs. This ensures the prompt is actually improving overall.

|

||||

|

||||

As you collect more examples and establish a user feedback loop, continue to build the pool of test cases.

|

||||

|

||||

<img width="772" alt="LLM ops" src="https://github.com/typpo/promptfoo/assets/310310/cf0461a7-2832-4362-9fbb-4ebd911d06ff">

|

||||

|

||||

## Usage (command line & web viewer)

|

||||

## Usage

|

||||

|

||||

To get started, run the following command:

|

||||

To get started, run this command:

|

||||

|

||||

```

|

||||

npx promptfoo init

|

||||

```

|

||||

|

||||

This will create some templates in your current directory: `prompts.txt`, `vars.csv`, and `promptfooconfig.js`.

|

||||

This will create some placeholders in your current directory: `prompts.txt` and `promptfooconfig.yaml`.

|

||||

|

||||

After editing the prompts and variables to your liking, run the eval command to kick off an evaluation:

|

||||

|

||||

@@ -54,20 +54,75 @@ After editing the prompts and variables to your liking, run the eval command to

npx promptfoo eval
```

If you're looking to customize your usage, you have a wide set of parameters at your disposal. See the [Configuration docs](https://www.promptfoo.dev/docs/configuration/parameters) for more detail:

### Configuration

| Option | Description |
| ----------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |
| `-p, --prompts <paths...>` | Paths to prompt files, directory, or glob |
| `-r, --providers <name or path...>` | One of: openai:chat, openai:completion, openai:model-name, localai:chat:model-name, localai:completion:model-name. See [API providers](https://www.promptfoo.dev/docs/configuration/providers) |
| `-o, --output <path>` | Path to output file (csv, json, yaml, html) |
| `-v, --vars <path>` | Path to file with prompt variables (csv, json, yaml) |
| `-c, --config <path>` | Path to configuration file. `promptfooconfig.js[on]` is automatically loaded if present |
| `-j, --max-concurrency <number>` | Maximum number of concurrent API calls |
| `--table-cell-max-length <number>` | Truncate console table cells to this length |
| `--prompt-prefix <path>` | This prefix is prepended to every prompt |
| `--prompt-suffix <path>` | This suffix is appended to every prompt |
| `--grader` | Provider that will grade outputs, if you are using [LLM grading](https://www.promptfoo.dev/docs/configuration/expected-outputs) |

The YAML configuration format runs each prompt through a series of example inputs (aka "test case") and checks if they meet requirements (aka "assert").

See the [Configuration docs](https://www.promptfoo.dev/docs/configuration/parameters) for more detail.

```yaml
prompts: [prompts.txt]
providers: [openai:gpt-3.5-turbo]
tests:
  - description: First test case - automatic review
    vars:
      var1: first variable's value
      var2: another value
      var3: some other value
    assert:
      - type: equality
        value: expected LLM output goes here
      - type: function
        value: output.includes('some text')

  - description: Second test case - manual review
    # Test cases don't need assertions if you prefer to review the output yourself
    vars:
      var1: new value
      var2: another value
      var3: third value

  - description: Third test case - other types of automatic review
    vars:
      var1: yet another value
      var2: and another
      var3: dear llm, please output your response in json format
    assert:
      - type: contains-json
      - type: similarity
        value: ensures that output is semantically similar to this text
      - type: llm-rubric
        value: ensure that output contains a reference to X
```
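
The `equality`, `function`, and `contains-json` checks in the example above can be approximated in a few lines of JavaScript. The sketch below is a hypothetical illustration of those semantics, not promptfoo's own code: `checkAssertion` and `containsJson` are names invented here, treating a `function` value as a JavaScript expression with `output` in scope is an assumption based on `output.includes('some text')`, and `similarity` and `llm-rubric` are left out because they need an embedding or grading model.

```javascript
// Hypothetical sketch: checks a subset of the assertion types from the YAML example
// against one LLM output string. This is not promptfoo's implementation.

// Returns true if some substring starting with "{" or "[" parses as JSON.
function containsJson(text) {
  for (let start = 0; start < text.length; start++) {
    if (text[start] !== '{' && text[start] !== '[') continue;
    for (let end = text.length; end > start; end--) {
      try {
        JSON.parse(text.slice(start, end));
        return true;
      } catch {
        // This slice is not valid JSON; try a shorter one.
      }
    }
  }
  return false;
}

function checkAssertion(assertion, output) {
  switch (assertion.type) {
    case 'equality':
      return output === assertion.value;
    case 'function': {
      // Assumption: `value` is a JavaScript expression evaluated with `output` in scope.
      const fn = new Function('output', `return (${assertion.value});`);
      return Boolean(fn(output));
    }
    case 'contains-json':
      return containsJson(output);
    default:
      throw new Error(`Assertion type not covered by this sketch: ${assertion.type}`);
  }
}

// Usage: the two assertions from the first test case in the YAML example.
const output = 'This answer contains some text.';
const results = [
  { type: 'equality', value: 'expected LLM output goes here' },
  { type: 'function', value: "output.includes('some text')" },
].map((assertion) => checkAssertion(assertion, output));
console.log(results); // [ false, true ]
```

Because `function` values are evaluated as code, a sketch like this should only be pointed at trusted configuration files.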

### Tests on spreadsheet

Some people prefer to configure their LLM tests in a CSV. In that case, the config is pretty simple:

```yaml
prompts: [prompts.txt]
providers: [openai:gpt-3.5-turbo]
tests: tests.csv
```

See [example CSV](https://github.com/typpo/promptfoo/blob/main/examples/simple-test/tests.csv).
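
As a rough illustration of the CSV option, the hypothetical `csvToTests` helper below turns CSV text into test cases, assuming every header names a prompt variable and every row becomes one test's `vars`. promptfoo's real CSV loader may treat some columns specially (for example, expected outputs), which this sketch does not attempt; it handles quoted fields and doubled quotes but not line breaks inside quotes.

```javascript
// Hypothetical sketch: parse simple CSV text into [{ vars: {...} }, ...].
// Supports quoted fields ("a, b") and escaped quotes (""), not multi-line fields.
function parseCsvLine(line) {
  const fields = [];
  let current = '';
  let inQuotes = false;
  for (let i = 0; i < line.length; i++) {
    const ch = line[i];
    if (inQuotes) {
      if (ch === '"' && line[i + 1] === '"') {
        current += '"'; // doubled quote inside a quoted field
        i++;
      } else if (ch === '"') {
        inQuotes = false;
      } else {
        current += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ',') {
      fields.push(current);
      current = '';
    } else {
      current += ch;
    }
  }
  fields.push(current);
  return fields;
}

function csvToTests(csvText) {
  const lines = csvText.split(/\r?\n/).filter((l) => l.trim() !== '');
  if (lines.length === 0) return [];
  const headers = parseCsvLine(lines[0]);
  return lines.slice(1).map((line) => {
    const values = parseCsvLine(line);
    const vars = {};
    headers.forEach((header, i) => {
      vars[header] = values[i] ?? '';
    });
    return { vars };
  });
}

const tests = csvToTests('language,body\nFrench,Hello world\nPirate,"I\'m hungry, matey"');
console.log(tests);
```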

### Command-line

If you're looking to customize your usage, you have a wide set of parameters at your disposal.

| Option | Description |
| ----------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |
| `-p, --prompts <paths...>` | Paths to [prompt files](https://promptfoo.dev/docs/configuration/parameters#prompt-files), directory, or glob |
| `-r, --providers <name or path...>` | One of: openai:chat, openai:completion, openai:model-name, localai:chat:model-name, localai:completion:model-name. See [API providers](https://promptfoo.dev/docs/configuration/providers) |
| `-o, --output <path>` | Path to [output file](https://promptfoo.dev/docs/configuration/parameters#output-file) (csv, json, yaml, html) |
| `--tests <path>` | Path to [external test file](https://promptfoo.dev/docs/configurationexpected-outputsassertions#load-an-external-tests-file) |
| `-c, --config <path>` | Path to [configuration file](https://promptfoo.dev/docs/configuration/guide). `promptfooconfig.js/json/yaml` is automatically loaded if present |
| `-j, --max-concurrency <number>` | Maximum number of concurrent API calls |
| `--table-cell-max-length <number>` | Truncate console table cells to this length |
| `--prompt-prefix <path>` | This prefix is prepended to every prompt |
| `--prompt-suffix <path>` | This suffix is appended to every prompt |
| `--grader` | [Provider](https://promptfoo.dev/docs/configuration/providers) that will conduct the evaluation, if you are [using LLM to grade your output](https://promptfoo.dev/docs/configuration/expected-outputs#llm-evaluation) |
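
The `-j, --max-concurrency` option caps how many API calls are in flight at once. A common way to implement such a cap, shown here as a hypothetical `runWithConcurrency` helper rather than promptfoo's actual code, is a small pool of async workers that pull tasks from a shared index:

```javascript
// Hypothetical sketch of a concurrency cap: at most `limit` tasks run at a time.
// Each task is a function returning a Promise.
async function runWithConcurrency(tasks, limit) {
  const results = new Array(tasks.length);
  let next = 0;

  async function worker() {
    // JavaScript runs this synchronously between awaits, so two workers
    // can never claim the same index.
    while (next < tasks.length) {
      const index = next++;
      results[index] = await tasks[index]();
    }
  }

  const workerCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Usage: fake "API calls" that record how many are running simultaneously.
let inFlight = 0;
let maxSeen = 0;
const fakeCall = (value) => async () => {
  inFlight++;
  maxSeen = Math.max(maxSeen, inFlight);
  await new Promise((resolve) => setTimeout(resolve, 10));
  inFlight--;
  return value * 2;
};

runWithConcurrency([1, 2, 3, 4, 5].map(fakeCall), 2).then((out) => {
  console.log(out, 'max in flight:', maxSeen);
});
```

Results are stored by index, so they come back in input order even when calls finish out of order.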

After running an eval, you may optionally use the `view` command to open the web viewer:

@@ -79,10 +134,10 @@ npx promptfoo view

#### Prompt quality

In this example, we evaluate whether adding adjectives to the personality of an assistant bot affects the responses:

In [this example](https://github.com/typpo/promptfoo/tree/main/examples/assistant-cli), we evaluate whether adding adjectives to the personality of an assistant bot affects the responses:

```bash
npx promptfoo eval -p prompts.txt -v vars.csv -r openai:gpt-3.5-turbo
npx promptfoo eval -p prompts.txt -r openai:gpt-3.5-turbo -t tests.csv
```

<!--

@@ -93,15 +148,13 @@ npx promptfoo eval -p prompts.txt -v vars.csv -r openai:gpt-3.5-turbo

|

||||

|

||||

This command will evaluate the prompts in `prompts.txt`, substituing the variable values from `vars.csv`, and output results in your terminal.

|

||||

|

||||

Have a look at the setup and full output [here](https://github.com/typpo/promptfoo/tree/main/examples/assistant-cli).

|

||||

|

||||

You can also output a nice [spreadsheet](https://docs.google.com/spreadsheets/d/1nanoj3_TniWrDl1Sj-qYqIMD6jwm5FBy15xPFdUTsmI/edit?usp=sharing), [JSON](https://github.com/typpo/promptfoo/blob/main/examples/simple-cli/output.json), YAML, or an HTML file:

|

||||

|

||||

|

||||

|

||||

#### Model quality

|

||||

|

||||

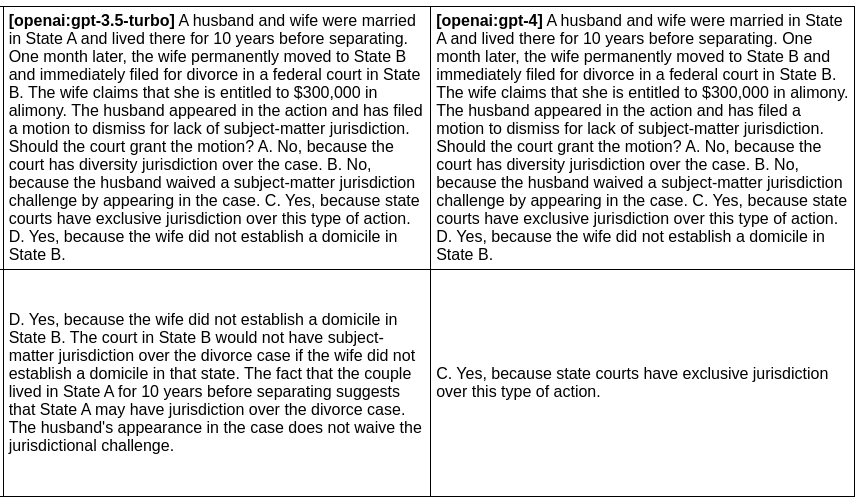

In this example, we evaluate the difference between GPT 3 and GPT 4 outputs for a given prompt:

|

||||

In the [next example](https://github.com/typpo/promptfoo/tree/main/examples/gpt-3.5-vs-4), we evaluate the difference between GPT 3 and GPT 4 outputs for a given prompt:

|

||||

|

||||

```bash

|

||||

npx promptfoo eval -p prompts.txt -r openai:gpt-3.5-turbo openai:gpt-4 -o output.html

|

||||

@@ -111,19 +164,46 @@ Produces this HTML table:

Full setup and output [here](https://github.com/typpo/promptfoo/tree/main/examples/gpt-3.5-vs-4).

## Usage (node package)

You can also use `promptfoo` as a library in your project by importing the `evaluate` function. The function takes the following parameters:

- `providers`: a list of provider strings or `ApiProvider` objects, or just a single string or `ApiProvider`.
- `options`: the prompts and variables you want to test:
- `testSuite`: the JavaScript equivalent of `promptfooconfig.yaml`

```typescript
{
  prompts: string[];
interface TestSuiteConfig {
  providers: string[]; // Valid provider name (e.g. openai:gpt-3.5-turbo)
  prompts: string[]; // List of prompts
  tests: string | TestCase[]; // Path to a CSV file, or list of test cases

  defaultTest?: Omit<TestCase, 'description'>; // Optional: add default vars and assertions on test case
  outputPath?: string; // Optional: write results to file
}

interface TestCase {
  description?: string;
  vars?: Record<string, string>;
  assert?: Assertion[];

  prompt?: PromptConfig;
  grading?: GradingConfig;
}

interface Assertion {
  type: 'equality' | 'is-json' | 'contains-json' | 'function' | 'similarity' | 'llm-rubric';
  value?: string;
  threshold?: number; // For similarity assertions
  provider?: ApiProvider; // For assertions that require an LLM provider
}
```
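
The `defaultTest` comment above says it adds default vars and assertions to each test case. A plausible reading, sketched below with a hypothetical `applyDefaultTest` helper, is that a test's own vars override the defaults and the default assertions run alongside the test's own; these merge rules are an assumption, not a description of promptfoo internals.

```javascript
// Hypothetical sketch: merge `defaultTest` into each test case.
// Assumed rules: test vars override default vars; assertion lists are concatenated.
function applyDefaultTest(tests, defaultTest = {}) {
  return tests.map((test) => ({
    ...defaultTest,
    ...test,
    vars: { ...(defaultTest.vars || {}), ...(test.vars || {}) },
    assert: [...(defaultTest.assert || []), ...(test.assert || [])],
  }));
}

const merged = applyDefaultTest(
  [
    { description: 'Bob', vars: { name: 'Bob' } },
    { vars: { name: 'Jane' }, assert: [{ type: 'contains-json' }] },
  ],
  {
    vars: { tone: 'friendly' }, // hypothetical default var for illustration
    assert: [{ type: 'llm-rubric', value: 'Do not mention that you are an AI or chat assistant' }],
  },
);
console.log(JSON.stringify(merged, null, 2));
```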

- `options`: misc options related to how the tests are run

```typescript
interface EvaluateOptions {
  maxConcurrency?: number;
  showProgressBar?: boolean;
  generateSuggestions?: boolean;
}
```

@@ -134,61 +214,31 @@ You can also use `promptfoo` as a library in your project by importing the `eval

```js
import promptfoo from 'promptfoo';

const options = {
const results = await promptfoo.evaluate({
  prompts: ['Rephrase this in French: {{body}}', 'Rephrase this like a pirate: {{body}}'],
  vars: [{ body: 'Hello world' }, { body: "I'm hungry" }],
};

(async () => {
  const summary = await promptfoo.evaluate('openai:gpt-3.5-turbo', options);
  console.log(summary);
})();
```

This code imports the `promptfoo` library, defines the evaluation options, and then calls the `evaluate` function with these options. The results are logged to the console:

```js
{
"results": [
providers: ['openai:gpt-3.5-turbo'],
tests: [
{
"prompt": {
"raw": "Rephrase this in French: Hello world",
"display": "Rephrase this in French: {{body}}"
vars: {
body: 'Hello world',
},
},
{
vars: {
body: "I'm hungry",
},
"vars": {
"body": "Hello world"
},
"response": {
"output": "Bonjour le monde",
"tokenUsage": {
"total": 19,
"prompt": 16,
"completion": 3
}
}
},
// ...
],
"stats": {
"successes": 4,
"failures": 0,
"tokenUsage": {
"total": 120,
"prompt": 72,
"completion": 48
}
},
"table": [
// ...
]
}
});
```

[See full example here](https://github.com/typpo/promptfoo/tree/main/examples/simple-import)

This code imports the `promptfoo` library, defines the evaluation options, and then calls the `evaluate` function with these options.

See the full example [here](https://github.com/typpo/promptfoo/tree/main/examples/simple-import), which includes an example results object.
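
The results object summarizes the run in a `stats` block (successes, failures, token usage). The hypothetical `summarize` helper below shows how those totals can be derived from a `results` array shaped like the one in `examples/js-config/output.json` (a `success` flag plus `response.tokenUsage`); it is a sketch under that assumption, not the code promptfoo uses.

```javascript
// Hypothetical sketch: aggregate per-result data into a stats object.
function summarize(results) {
  const stats = {
    successes: 0,
    failures: 0,
    tokenUsage: { total: 0, prompt: 0, completion: 0, cached: 0 },
  };
  for (const result of results) {
    if (result.success) stats.successes++;
    else stats.failures++;
    // Missing usage fields count as zero.
    const usage = (result.response && result.response.tokenUsage) || {};
    for (const key of Object.keys(stats.tokenUsage)) {
      stats.tokenUsage[key] += usage[key] || 0;
    }
  }
  return stats;
}

const stats = summarize([
  { success: true, response: { output: 'Bonjour le monde', tokenUsage: { total: 19, prompt: 16, completion: 3 } } },
  { success: true, response: { output: "J'ai faim.", tokenUsage: { cached: 24 } } },
  { success: false, response: { output: '' } },
]);
console.log(stats);
```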

## Configuration

- **[Setting up an eval](https://promptfoo.dev/docs/configuration/parameters)**: Learn more about how to set up prompt files, vars file, output, etc.
- **[Main guide](https://promptfoo.dev/docs/configuration/guide)**: Learn about how to configure your YAML file, set up prompt files, etc.
- **[Configuring test cases](https://promptfoo.dev/docs/configuration/expected-outputs)**: Learn more about how to configure expected outputs and test assertions.

## Installation

@@ -1,7 +1,13 @@

This example shows how you can use promptfoo to generate a side-by-side eval of two prompts for an ecommerce chat bot.

Run:

Configuration is in `promptfooconfig.yaml`. Run:

```
promptfoo eval -p prompts.txt --vars vars.csv -r openai:chat
promptfoo eval
```

Full command-line equivalent:

```
promptfoo eval --prompts prompts.txt --tests tests.csv --providers openai:gpt-3.5-turbo --output output.json
```

examples/assistant-cli/promptfooconfig.yaml

@@ -0,0 +1,3 @@

prompts: prompts.txt
providers: openai:gpt-3.5-turbo
tests: tests.csv

examples/custom-provider/README.md

@@ -0,0 +1,13 @@

This example uses a custom API provider in `customProvider.js`. It also uses CSV test cases.

Run:

```
promptfoo eval
```

Full command-line equivalent:

```
promptfoo eval --prompts prompts.txt --tests vars.csv --providers openai:chat --output output.json --providers customProvider.js
```

examples/custom-provider/promptfooconfig.yaml

@@ -0,0 +1,3 @@

prompts: prompts.txt
providers: customProvider.js
tests: vars.csv
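
`customProvider.js` is not included in this diff. As a hypothetical sketch, a provider can be modeled as an object with an `id()` method and an async `callApi(prompt)` method that resolves to `{ output, tokenUsage }`, the same shape as the `response` entries in the example output files. Treat this interface as an assumption and check the providers documentation for the exact contract; `EchoProvider` below makes no network calls.

```javascript
// Hypothetical custom provider sketch (interface shape assumed, see lead-in).
// It "answers" by upper-casing the prompt, so it runs offline.
class EchoProvider {
  id() {
    return 'echo-provider';
  }

  async callApi(prompt) {
    const output = prompt.toUpperCase();
    // Whitespace word counts stand in for real token usage.
    const promptTokens = prompt.split(/\s+/).filter(Boolean).length;
    const completionTokens = output.split(/\s+/).filter(Boolean).length;
    return {
      output,
      tokenUsage: {
        total: promptTokens + completionTokens,
        prompt: promptTokens,
        completion: completionTokens,
      },
    };
  }
}

// A real provider file would presumably export the class; here it is called directly.
const provider = new EchoProvider();
provider.callApi('Rephrase this in French: Hello world').then((res) => {
  console.log(provider.id(), res);
});
```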


@@ -1,7 +1,13 @@

This example shows how you can use promptfoo to generate a side-by-side eval of multiple prompts to compare GPT 3 and GPT 4 outputs.

Run:

Configure in `promptfooconfig.yaml`. Run with:

```
promptfoo eval -p prompts.txt -r openai:gpt-3.5-turbo openai:gpt-4
promptfoo eval
```

Full command-line equivalent:

```
promptfoo eval --prompts prompts.txt --providers openai:gpt-3.5-turbo openai:gpt-4
```

examples/gpt-3.5-vs-4/promptfooconfig.yaml

@@ -0,0 +1,4 @@

prompts: prompts.txt
providers:
  - openai:gpt-3.5-turbo
  - openai:gpt-4

examples/js-config/README.md

@@ -0,0 +1,5 @@

This example is pre-configured in `promptfooconfig.js`. That means you can just run:

```
promptfoo eval
```

examples/js-config/output.html

@@ -0,0 +1,92 @@

<!DOCTYPE html>
<html>
  <head>
    <meta charset="utf-8" />
    <meta name="viewport" content="width=device-width" />
    <title>Table Output</title>
    <style>
      body {
        font-family: -apple-system, BlinkMacSystemFont, Segoe UI, Roboto, Helvetica, Arial,
          sans-serif;
      }
      table,
      th,
      td {
        border: 1px solid black;
        border-collapse: collapse;
        text-align: left;
        word-break: break-all;
      }
      th,
      td {
        padding: 5px;
        min-width: 200px;
      }

      tr > td[data-content^='[PASS]'] {
        color: green;
      }
      tr > td[data-content^='[FAIL]'] {
        color: #ad0000;
      }
    </style>
  </head>
  <body>
    <table>
      <thead>
        <th>Rephrase this in {{language}}: {{body}}</th>

        <th>Translate this to conversational {{language}}: {{body}}</th>

        <th>body</th>

        <th>language</th>
      </thead>
      <tbody>
        <tr>
          <td data-content="Bonjour le monde">Bonjour le monde</td>

          <td data-content="Bonjour le monde">Bonjour le monde</td>

          <td data-content="Hello world">Hello world</td>

          <td data-content="French">French</td>
        </tr>

        <tr>
          <td data-content="J'ai faim.">J'ai faim.</td>

          <td data-content="J'ai faim.">J'ai faim.</td>

          <td data-content="I'm hungry">I'm hungry</td>

          <td data-content="French">French</td>
        </tr>

        <tr>
          <td data-content="Ahoy thar, world!">Ahoy thar, world!</td>

          <td data-content="Ahoy thar world!">Ahoy thar world!</td>

          <td data-content="Hello world">Hello world</td>

          <td data-content="Pirate">Pirate</td>
        </tr>

        <tr>
          <td data-content="Arrr, me belly be empty and yearnin' for grub.">
            Arrr, me belly be empty and yearnin' for grub.
          </td>

          <td data-content="Arrr, me belly be rumblin'! I be needin' some grub!">
            Arrr, me belly be rumblin'! I be needin' some grub!
          </td>

          <td data-content="I'm hungry">I'm hungry</td>

          <td data-content="Pirate">Pirate</td>
        </tr>
      </tbody>
    </table>
  </body>
</html>

examples/js-config/output.json

@@ -0,0 +1,181 @@

{
  "version": 1,
  "results": [
    {
      "prompt": {
        "raw": "Rephrase this in French: Hello world",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "Hello world"
      },
      "response": {
        "output": "Bonjour le monde",
        "tokenUsage": {
          "cached": 19
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational French: Hello world",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "Hello world"
      },
      "response": {
        "output": "Bonjour le monde",
        "tokenUsage": {
          "cached": 20
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in French: I'm hungry",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "I'm hungry"
      },
      "response": {
        "output": "J'ai faim.",
        "tokenUsage": {
          "cached": 24
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational French: I'm hungry",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "I'm hungry"
      },
      "response": {
        "output": "J'ai faim.",
        "tokenUsage": {
          "cached": 25
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in Pirate: Hello world",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar, world!",
        "tokenUsage": {
          "cached": 23
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational Pirate: Hello world",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar world!",
        "tokenUsage": {
          "cached": 23
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in Pirate: I'm hungry",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be empty and yearnin' for grub.",
        "tokenUsage": {
          "cached": 33
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational Pirate: I'm hungry",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be rumblin'! I be needin' some grub!",
        "tokenUsage": {
          "cached": 39
        }
      },
      "success": true
    }
  ],
  "stats": {
    "successes": 8,
    "failures": 0,
    "tokenUsage": {
      "total": 0,
      "prompt": 0,
      "completion": 0,
      "cached": 206
    }
  },
  "table": {
    "head": {
      "prompts": [
        "Rephrase this in {{language}}: {{body}}",
        "Translate this to conversational {{language}}: {{body}}"
      ],
      "vars": ["body", "language"]
    },
    "body": [
      {
        "outputs": ["Bonjour le monde", "Bonjour le monde"],
        "vars": ["Hello world", "French"]
      },
      {
        "outputs": ["J'ai faim.", "J'ai faim."],
        "vars": ["I'm hungry", "French"]
      },
      {
        "outputs": ["Ahoy thar, world!", "Ahoy thar world!"],
        "vars": ["Hello world", "Pirate"]
      },
      {
        "outputs": [
          "Arrr, me belly be empty and yearnin' for grub.",
          "Arrr, me belly be rumblin'! I be needin' some grub!"
        ],
        "vars": ["I'm hungry", "Pirate"]
      }
    ]
  }
}
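
The `table` section above is a pivot of `results`: one column per prompt template (`prompt.display`) and one row per distinct set of vars. The hypothetical `buildTable` function below reconstructs that shape; its rules (columns in first-seen order, var names sorted alphabetically as in `head.vars`) are inferred from this file rather than taken from promptfoo's source.

```javascript
// Hypothetical sketch: pivot eval results into { head, body } like the "table" above.
function buildTable(results) {
  const prompts = [];
  for (const r of results) {
    if (!prompts.includes(r.prompt.display)) prompts.push(r.prompt.display);
  }
  // Assumption: var columns are sorted by name (matches head.vars in output.json).
  const varNames = [...new Set(results.flatMap((r) => Object.keys(r.vars)))].sort();

  const rows = new Map(); // key: JSON of var values in varNames order
  for (const r of results) {
    const varValues = varNames.map((name) => r.vars[name] ?? '');
    const key = JSON.stringify(varValues);
    if (!rows.has(key)) {
      rows.set(key, { outputs: new Array(prompts.length).fill(''), vars: varValues });
    }
    rows.get(key).outputs[prompts.indexOf(r.prompt.display)] = r.response.output;
  }
  return { head: { prompts, vars: varNames }, body: [...rows.values()] };
}

const rephrase = 'Rephrase this in {{language}}: {{body}}';
const translate = 'Translate this to conversational {{language}}: {{body}}';
const sample = [
  { prompt: { display: rephrase }, vars: { language: 'French', body: 'Hello world' }, response: { output: 'Bonjour le monde' } },
  { prompt: { display: translate }, vars: { language: 'French', body: 'Hello world' }, response: { output: 'Bonjour le monde' } },
  { prompt: { display: rephrase }, vars: { language: 'French', body: "I'm hungry" }, response: { output: "J'ai faim." } },
  { prompt: { display: translate }, vars: { language: 'French', body: "I'm hungry" }, response: { output: "J'ai faim." } },
];
console.log(JSON.stringify(buildTable(sample), null, 2));
```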

examples/js-config/promptfooconfig.js

@@ -0,0 +1,31 @@

module.exports = {
  description: 'A translator built with LLM',
  prompts: ['prompts.txt'],
  providers: ['openai:gpt-3.5-turbo'],
  tests: [
    {
      vars: {
        language: 'French',
        body: 'Hello world',
      },
    },
    {
      vars: {
        language: 'French',
        body: "I'm hungry",
      },
    },
    {
      vars: {
        language: 'Pirate',
        body: 'Hello world',
      },
    },
    {
      vars: {
        language: 'Pirate',
        body: "I'm hungry",
      },
    },
  ],
};
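
Each test case in this config is run against each prompt in `prompts.txt` and each provider, so 2 prompts × 4 tests × 1 provider gives the 8 results counted in `output.json` (`"successes": 8`). The hypothetical `expandJobs` sketch below shows that cross product; the loop order (tests, then prompts, then providers) is chosen to match the order of `results` in that file and is otherwise an assumption.

```javascript
// Hypothetical sketch: every (test, prompt, provider) combination becomes one eval job.
function expandJobs({ prompts, providers, tests }) {
  const jobs = [];
  for (const test of tests) {
    for (const prompt of prompts) {
      for (const provider of providers) {
        jobs.push({ prompt, provider, vars: test.vars });
      }
    }
  }
  return jobs;
}

const jobs = expandJobs({
  prompts: [
    'Rephrase this in {{language}}: {{body}}',
    'Translate this to conversational {{language}}: {{body}}',
  ],
  providers: ['openai:gpt-3.5-turbo'],
  tests: [
    { vars: { language: 'French', body: 'Hello world' } },
    { vars: { language: 'French', body: "I'm hungry" } },
    { vars: { language: 'Pirate', body: 'Hello world' } },
    { vars: { language: 'Pirate', body: "I'm hungry" } },
  ],
});
console.log(jobs.length); // 8
```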

examples/js-config/prompts.txt

@@ -0,0 +1,3 @@

Rephrase this in {{language}}: {{body}}
---
Translate this to conversational {{language}}: {{body}}
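
In `prompts.txt`, a line containing only `---` separates the two templates, and `{{language}}` and `{{body}}` are filled in from each test's vars (compare the `raw` and `display` fields in `output.json`). A minimal sketch of both steps follows. The regex-based `render` is a simplification assumed here; promptfoo may use a full template engine that supports more syntax.

```javascript
// Sketch: split a prompts file on "---" separator lines, then fill {{var}} placeholders.
function splitPrompts(fileText) {
  return fileText
    .split(/^---$/m)
    .map((p) => p.trim())
    .filter((p) => p.length > 0);
}

// Simplified renderer: replaces {{ name }} with vars[name]; unknown names are left as-is.
function render(template, vars) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(vars, name) ? String(vars[name]) : match,
  );
}

const fileText = [
  'Rephrase this in {{language}}: {{body}}',
  '---',
  'Translate this to conversational {{language}}: {{body}}',
].join('\n');

const templates = splitPrompts(fileText);
console.log(templates.map((t) => render(t, { language: 'French', body: 'Hello world' })));
```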

examples/node-package/index.js

@@ -0,0 +1,22 @@

import promptfoo from '../../dist/index.js';

(async () => {
  const results = await promptfoo.evaluate({
    prompts: ['Rephrase this in French: {{body}}', 'Rephrase this like a pirate: {{body}}'],
    providers: ['openai:gpt-3.5-turbo'],
    tests: [
      {
        vars: {
          body: 'Hello world',
        },
      },
      {
        vars: {
          body: "I'm hungry",
        },
      },
    ],
  });
  console.log('RESULTS:');
  console.log(results);
})();

@@ -1,9 +1,15 @@

This example shows how you can have an LLM grade its own output according to predefined expectations.

Configuration is in promptfooconfig.js

Identical configurations are provided in `promptfooconfig.js` and `promptfooconfig.yaml`.

Run:

```
promptfoo eval
```

You can also define the tests in a CSV file:

```
promptfoo eval --tests tests.csv
```
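
The `llm-rubric` assertion in this example has a model judge each answer against a plain-language rule. The sketch below shows one way such a check could be wired up. The grading prompt wording, the `{ pass, reason }` JSON reply, and the `gradeWithRubric` helper are assumptions for illustration, and the grader is any async function from prompt text to reply text (stubbed here so the code runs offline).

```javascript
// Hypothetical llm-rubric sketch: build a grading prompt, ask a grader, parse its verdict.
function buildGradingPrompt(rubric, output) {
  return [
    'You are grading an output against a rubric.',
    `Rubric: ${rubric}`,
    `Output: ${output}`,
    'Reply with JSON only: {"pass": true|false, "reason": "..."}',
  ].join('\n');
}

async function gradeWithRubric(output, rubric, grader) {
  const reply = await grader(buildGradingPrompt(rubric, output));
  try {
    const verdict = JSON.parse(reply);
    return { pass: verdict.pass === true, reason: String(verdict.reason ?? '') };
  } catch {
    // An unparseable reply is treated as a failure rather than a pass.
    return { pass: false, reason: `Could not parse grader reply: ${reply}` };
  }
}

// Stub grader standing in for a real LLM: fails outputs that mention being an AI.
async function stubGrader(prompt) {
  const output = prompt.split('\nOutput: ')[1].split('\nReply with')[0];
  const mentionsAi = /\b(AI|chat assistant)\b/i.test(output);
  return JSON.stringify({ pass: !mentionsAi, reason: mentionsAi ? 'Mentions being an AI' : 'OK' });
}

const rubric = 'Do not mention that you are an AI or chat assistant';
gradeWithRubric('As an AI, I cannot browse your website.', rubric, stubGrader).then((v) => console.log(v));
```

Failing closed on an unparseable reply keeps a misbehaving grader from silently passing every test.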


@@ -1,6 +1,75 @@

module.exports = {
  providers: ['openai:chat:gpt-3.5-turbo'],
  prompts: ['./prompts.txt'],
  vars: './vars.csv',
  grader: 'openai:chat:gpt-4',
  prompts: 'prompts.txt',
  providers: 'openai:gpt-3.5-turbo',
  defaultTest: {
    assert: [
      {
        type: 'llm-rubric',
        value: 'Do not mention that you are an AI or chat assistant',
      },
    ],
  },
  tests: [
    {
      vars: {
        name: 'Bob',
        question: 'Can you help me find a specific product on your website?',
      },
    },
    {
      vars: {
        name: 'Jane',
        question: 'Do you have any promotions or discounts currently available?',
      },
    },
    {
      vars: {
        name: 'Dave',
        question: 'What are your shipping and return policies?',
      },
    },
    {
      vars: {
        name: 'Jim',
        question: 'Can you provide more information about the product specifications or features?',
      },
    },
    {
      vars: {
        name: 'Alice',
        question: "Can you recommend products that are similar to what I've been looking at?",
      },
    },
    {
      vars: {
        name: 'Sophie',
        question:
          'Do you have any recommendations for products that are currently popular or trending?',
      },
    },
    {
      vars: {
        name: 'Ben',
        question: 'Can you check the availability of a product at a specific store location?',
      },
    },
    {
      vars: {
        name: 'Jessie',
        question: 'How can I track my order after it has been shipped?',
      },
    },
    {
      vars: {
        name: 'Kim',
        question: 'What payment methods do you accept?',
      },
    },
    {
      vars: {
        name: 'Emily',
        question: "Can you help me with a problem I'm having with my account or order?",
      },
    },
  ],
};

examples/self-grading/promptfooconfig.yaml

@@ -0,0 +1,37 @@

prompts: prompts.txt
providers: openai:gpt-3.5-turbo
defaultTest:
  assert:
    - type: llm-rubric
      value: Do not mention that you are an AI or chat assistant
tests:
  - vars:
      name: Bob
      question: Can you help me find a specific product on your website?
  - vars:
      name: Jane
      question: Do you have any promotions or discounts currently available?
  - vars:
      name: Dave
      question: What are your shipping and return policies?
  - vars:
      name: Jim
      question: Can you provide more information about the product specifications or features?
  - vars:
      name: Alice
      question: Can you recommend products that are similar to what I've been looking at?
  - vars:
      name: Sophie
      question: Do you have any recommendations for products that are currently popular or trending?
  - vars:
      name: Ben
      question: Can you check the availability of a product at a specific store location?
  - vars:
      name: Jessie
      question: How can I track my order after it has been shipped?
  - vars:
      name: Kim
      question: What payment methods do you accept?
  - vars:
      name: Emily
      question: Can you help me with a problem I'm having with my account or order?

@@ -1,5 +0,0 @@

Run:

```
promptfoo eval --prompts prompts.txt --vars vars.csv --providers openai:chat --output output.json --providers customProvider.js
```

@@ -1,11 +1,11 @@

This example is pre-configured in `promptfooconfig.js`. That means you can just run:

This example is pre-configured in `promptfooconfig.yaml` (both identical examples). That means you can just run:

```
promptfoo eval
```

Here's the full command:

To override prompts, providers, output, etc. you can run:

```
promptfoo eval --prompts prompts.txt --vars vars.csv --providers openai:chat --output output.json
promptfoo eval --prompts prompts.txt --providers openai:chat --output output.json
```

@@ -14,39 +14,77 @@
td {
border: 1px solid black;
border-collapse: collapse;
text-align: left;
word-break: break-all;
}
th,
td {
padding: 5px;
min-width: 200px;
}

tr > td[data-content^='[PASS]'] {
color: green;
}
tr > td[data-content^='[FAIL]'] {
color: #ad0000;
}
</style>
</head>
<body>
<table>
<thead>
<th>Rephrase this in French: {{body}}</th>
<th>Rephrase this in {{language}}: {{body}}</th>

<th>Rephrase this like a pirate: {{body}}</th>
<th>Translate this to conversational {{language}}: {{body}}</th>

<th>body</th>

<th>language</th>
</thead>
<tbody>
<tr>
<td>Bonjour le monde</td>
<td data-content="Bonjour le monde">Bonjour le monde</td>

<td>Ahoy thar, me hearties! Avast ye, world!</td>
<td data-content="Bonjour le monde">Bonjour le monde</td>

<td>Hello world</td>
<td data-content="Hello world">Hello world</td>

<td data-content="French">French</td>
</tr>

<tr>
<td>J'ai faim.</td>
<td data-content="J'ai faim.">J'ai faim.</td>

<td>
Arrr, me belly be empty and me throat be parched! I be needin' some grub, matey!
<td data-content="J'ai faim.">J'ai faim.</td>

<td data-content="I'm hungry">I'm hungry</td>

<td data-content="French">French</td>
</tr>

<tr>
<td data-content="Ahoy thar, world!">Ahoy thar, world!</td>

<td data-content="Ahoy thar world!">Ahoy thar world!</td>

<td data-content="Hello world">Hello world</td>

<td data-content="Pirate">Pirate</td>
</tr>

<tr>
<td data-content="Arrr, me belly be empty and yearnin' for grub.">
Arrr, me belly be empty and yearnin' for grub.
</td>

<td>I'm hungry</td>
<td data-content="Arrr, me belly be rumblin'! I be needin' some grub!">
Arrr, me belly be rumblin'! I be needin' some grub!
</td>

<td data-content="I'm hungry">I'm hungry</td>

<td data-content="Pirate">Pirate</td>
</tr>
</tbody>
</table>

@@ -1,19 +1,36 @@
{
  "version": 1,
  "results": [
    {
      "prompt": {
        "raw": "Rephrase this in French: Hello world",
        "display": "Rephrase this in French: {{body}}"
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "Hello world"
      },
      "response": {
        "output": "Bonjour le monde",
        "tokenUsage": {
          "total": 19,
          "prompt": 16,
          "completion": 3
          "cached": 19
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational French: Hello world",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "Hello world"
      },
      "response": {
        "output": "Bonjour le monde",
        "tokenUsage": {
          "cached": 20
        }
      },
      "success": true
@@ -21,74 +38,144 @@
    {
      "prompt": {
        "raw": "Rephrase this in French: I'm hungry",
        "display": "Rephrase this in French: {{body}}"
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "I'm hungry"
      },
      "response": {
        "output": "J'ai faim.",
        "tokenUsage": {
          "total": 24,
          "prompt": 19,
          "completion": 5
          "cached": 24
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this like a pirate: Hello world",
        "display": "Rephrase this like a pirate: {{body}}"
      },
      "vars": {
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar, me hearties! Avast ye, world!",
        "tokenUsage": {
          "total": 32,
          "prompt": 17,
          "completion": 15
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this like a pirate: I'm hungry",
        "display": "Rephrase this like a pirate: {{body}}"
        "raw": "Translate this to conversational French: I'm hungry",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be empty and me throat be parched! I be needin' some grub, matey!",
        "output": "J'ai faim.",
        "tokenUsage": {
          "total": 45,
          "prompt": 20,
          "completion": 25
          "cached": 25
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in Pirate: Hello world",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar, world!",
        "tokenUsage": {
          "cached": 23
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational Pirate: Hello world",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar world!",
        "tokenUsage": {
          "cached": 23
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in Pirate: I'm hungry",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be empty and yearnin' for grub.",
        "tokenUsage": {
          "cached": 33
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational Pirate: I'm hungry",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be rumblin'! I be needin' some grub!",
        "tokenUsage": {
          "cached": 39
        }
      },
      "success": true
    }
  ],
  "stats": {
    "successes": 4,
    "successes": 8,
    "failures": 0,
    "tokenUsage": {
      "total": 120,
      "prompt": 72,
      "completion": 48
      "total": 0,
      "prompt": 0,
      "completion": 0,
      "cached": 206
    }
  },
  "table": [
    ["Rephrase this in French: {{body}}", "Rephrase this like a pirate: {{body}}", "body"],
    ["Bonjour le monde", "Ahoy thar, me hearties! Avast ye, world!", "Hello world"],
    [
      "J'ai faim.",
      "Arrr, me belly be empty and me throat be parched! I be needin' some grub, matey!",
      "I'm hungry"
  "table": {
    "head": {
      "prompts": [
        "Rephrase this in {{language}}: {{body}}",
        "Translate this to conversational {{language}}: {{body}}"
      ],
      "vars": ["body", "language"]
    },
    "body": [
      {
        "outputs": ["Bonjour le monde", "Bonjour le monde"],
        "vars": ["Hello world", "French"]
      },
      {
        "outputs": ["J'ai faim.", "J'ai faim."],
        "vars": ["I'm hungry", "French"]
      },
      {
        "outputs": ["Ahoy thar, world!", "Ahoy thar world!"],
        "vars": ["Hello world", "Pirate"]
      },
      {
        "outputs": [
          "Arrr, me belly be empty and yearnin' for grub.",
          "Arrr, me belly be rumblin'! I be needin' some grub!"
        ],
        "vars": ["I'm hungry", "Pirate"]
      }
    ]
  ]
  }
}
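
In the new `output.json`, `table.head` lists the prompt templates and the var names, and each `table.body` row holds one test's outputs (one per prompt) plus its var values. A sketch of deriving that shape from the flat `results` array, assuming rows are keyed by their var values in first-seen order and var columns are sorted by name (which matches `["body", "language"]` here but is an assumption). `buildTable` is hypothetical, not promptfoo's implementation:

```javascript
// Hypothetical sketch (not promptfoo's code): rebuild { head, body } from flat results.
function buildTable(results) {
  const prompts = [...new Set(results.map((r) => r.prompt.display))];
  // Sorted var names give a stable column order ("body" before "language").
  const varNames = [...new Set(results.flatMap((r) => Object.keys(r.vars)))].sort();

  const rows = new Map(); // serialized var values -> table row
  for (const r of results) {
    const varValues = varNames.map((name) => r.vars[name]);
    const key = JSON.stringify(varValues);
    if (!rows.has(key)) {
      rows.set(key, { outputs: new Array(prompts.length).fill(''), vars: varValues });
    }
    // Put the output in the column of the prompt that produced it.
    rows.get(key).outputs[prompts.indexOf(r.prompt.display)] = r.response.output;
  }
  return { head: { prompts, vars: varNames }, body: [...rows.values()] };
}

// Two of the eight results from output.json, trimmed to the fields used above.
const results = [
  {
    prompt: { display: 'Rephrase this in {{language}}: {{body}}' },
    vars: { language: 'Pirate', body: 'Hello world' },
    response: { output: 'Ahoy thar, world!' },
  },
  {
    prompt: { display: 'Translate this to conversational {{language}}: {{body}}' },
    vars: { language: 'Pirate', body: 'Hello world' },
    response: { output: 'Ahoy thar world!' },
  },
];

console.log(JSON.stringify(buildTable(results)));
```

The single resulting row matches the third `table.body` entry above.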

@@ -1,5 +0,0 @@
module.exports = {
  providers: ['openai:gpt-3.5-turbo'],
  prompts: ['./prompts.txt'],
  vars: './vars.csv',
};

16

examples/simple-cli/promptfooconfig.yaml

Normal file

@@ -0,0 +1,16 @@
description: A translator built with LLM
prompts: [prompts.txt]
providers: [openai:gpt-3.5-turbo]
tests:
  - vars:
      language: French
      body: Hello world
  - vars:
      language: French
      body: I'm hungry
  - vars:
      language: Pirate
      body: Hello world
  - vars:
      language: Pirate
      body: I'm hungry
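
With inline `tests`, every prompt runs against every test's `vars`: two prompts and four tests give the eight results recorded in `output.json`. A sketch of that expansion, assuming plain `{{name}}` substitution (promptfoo's real template engine may support more syntax) and the test-major ordering seen in `output.json`. `render` and `expandSuite` are hypothetical:

```javascript
// Hypothetical sketch of a prompts x tests expansion (not promptfoo's code).
// Simplified templating: replace each {{name}} with the matching var, leave unknown ones intact.
function render(template, vars) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, name) =>
    name in vars ? String(vars[name]) : match
  );
}

function expandSuite(prompts, tests) {
  const jobs = [];
  // Outer loop over tests, inner loop over prompts, mirroring the order in output.json.
  for (const test of tests) {
    for (const prompt of prompts) {
      jobs.push({ raw: render(prompt, test.vars), display: prompt, vars: test.vars });
    }
  }
  return jobs;
}

const prompts = [
  'Rephrase this in {{language}}: {{body}}',
  'Translate this to conversational {{language}}: {{body}}',
];
const tests = [
  { vars: { language: 'French', body: 'Hello world' } },
  { vars: { language: 'French', body: "I'm hungry" } },
  { vars: { language: 'Pirate', body: 'Hello world' } },
  { vars: { language: 'Pirate', body: "I'm hungry" } },
];

const jobs = expandSuite(prompts, tests);
console.log(jobs.length); // 8
console.log(jobs[1].raw); // Translate this to conversational French: Hello world
```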

@@ -1,3 +1,3 @@
Rephrase this in French: {{body}}
Rephrase this in {{language}}: {{body}}
---
Rephrase this like a pirate: {{body}}
Translate this to conversational {{language}}: {{body}}
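
`prompts.txt` holds several prompt templates separated by a `---` line. A sketch of splitting such a file, assuming the separator is a line containing only `---` (the exact delimiter rules promptfoo applies are not shown in this diff). `splitPrompts` is hypothetical:

```javascript
// Hypothetical sketch (not promptfoo's code): split a prompts file into templates.
function splitPrompts(fileText) {
  return fileText
    .split(/^---\r?$/m) // a line that is exactly "---" (optionally with a CR) separates prompts
    .map((p) => p.trim()) // drop the line breaks around each separator
    .filter((p) => p.length > 0); // ignore empty chunks, e.g. from a trailing separator
}

const fileText = [
  'Rephrase this in {{language}}: {{body}}',
  '---',
  'Translate this to conversational {{language}}: {{body}}',
  '',
].join('\n');

console.log(splitPrompts(fileText));
```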

@@ -1,3 +0,0 @@
body
Hello world
I'm hungry

11

examples/simple-csv/README.md

Normal file

@@ -0,0 +1,11 @@
This example is pre-configured in `promptfooconfig.yaml`. Run:

```
promptfoo eval
```

Here's the full command:

```
promptfoo eval --prompts prompts.txt --tests tests.csv --providers openai:gpt-3.5-turbo
```

92

examples/simple-csv/output.html

Normal file

@@ -0,0 +1,92 @@
<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8" />
<meta name="viewport" content="width=device-width" />
<title>Table Output</title>
<style>
body {
font-family: -apple-system, BlinkMacSystemFont, Segoe UI, Roboto, Helvetica, Arial,
sans-serif;
}
table,
th,
td {
border: 1px solid black;
border-collapse: collapse;
text-align: left;
word-break: break-all;
}
th,
td {
padding: 5px;
min-width: 200px;
}

tr > td[data-content^='[PASS]'] {
color: green;
}
tr > td[data-content^='[FAIL]'] {
color: #ad0000;
}
</style>
</head>
<body>
<table>
<thead>
<th>Rephrase this in {{language}}: {{body}}</th>

<th>Translate this to conversational {{language}}: {{body}}</th>

<th>body</th>

<th>language</th>
</thead>
<tbody>
<tr>
<td data-content="Bonjour le monde">Bonjour le monde</td>

<td data-content="Bonjour le monde">Bonjour le monde</td>

<td data-content="Hello world">Hello world</td>

<td data-content="French">French</td>
</tr>

<tr>
<td data-content="J'ai faim.">J'ai faim.</td>

<td data-content="J'ai faim.">J'ai faim.</td>

<td data-content="I'm hungry">I'm hungry</td>

<td data-content="French">French</td>
</tr>

<tr>
<td data-content="Ahoy thar, world!">Ahoy thar, world!</td>

<td data-content="Ahoy thar world!">Ahoy thar world!</td>

<td data-content="Hello world">Hello world</td>

<td data-content="Pirate">Pirate</td>
</tr>

<tr>
<td data-content="Arrr, me belly be empty and yearnin' for grub.">
Arrr, me belly be empty and yearnin' for grub.
</td>

<td data-content="Arrr, me belly be rumblin'! I be needin' some grub!">
Arrr, me belly be rumblin'! I be needin' some grub!
</td>

<td data-content="I'm hungry">I'm hungry</td>

<td data-content="Pirate">Pirate</td>
</tr>
</tbody>
</table>
</body>
</html>
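
The stylesheet colors a cell green or red when its `data-content` attribute starts with `[PASS]` or `[FAIL]`, so the attribute has to carry the cell's full text. A sketch of emitting such a cell, assuming double-quoted attributes as in the generated file. `escapeHtml` and `renderCell` are hypothetical helpers, not the template promptfoo actually uses:

```javascript
// Hypothetical sketch: emit a table cell whose data-content mirrors its text,
// so selectors like td[data-content^='[FAIL]'] can match on the prefix.
function escapeHtml(text) {
  return text
    .replace(/&/g, '&amp;') // escape & first so later entities are not double-escaped
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;'); // required because the attribute value is double-quoted
}

function renderCell(text) {
  const safe = escapeHtml(text);
  return `<td data-content="${safe}">${safe}</td>`;
}

console.log(renderCell("I'm hungry")); // <td data-content="I'm hungry">I'm hungry</td>
console.log(renderCell('[FAIL] Expected "Ahoy"'));
```

The browser decodes `&quot;` back to `"` when reading the attribute, so the `^='[FAIL]'` prefix match still works on escaped text.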

181

examples/simple-csv/output.json

Normal file

@@ -0,0 +1,181 @@
{
  "version": 1,
  "results": [
    {
      "prompt": {
        "raw": "Rephrase this in French: Hello world",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "Hello world"
      },
      "response": {
        "output": "Bonjour le monde",
        "tokenUsage": {
          "cached": 19
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational French: Hello world",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "Hello world"
      },
      "response": {
        "output": "Bonjour le monde",
        "tokenUsage": {
          "cached": 20
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in French: I'm hungry",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "I'm hungry"
      },
      "response": {
        "output": "J'ai faim.",
        "tokenUsage": {
          "cached": 24
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational French: I'm hungry",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "French",
        "body": "I'm hungry"
      },
      "response": {
        "output": "J'ai faim.",
        "tokenUsage": {
          "cached": 25
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in Pirate: Hello world",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar, world!",
        "tokenUsage": {
          "cached": 23
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational Pirate: Hello world",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "Hello world"
      },
      "response": {
        "output": "Ahoy thar world!",
        "tokenUsage": {
          "cached": 23
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Rephrase this in Pirate: I'm hungry",
        "display": "Rephrase this in {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be empty and yearnin' for grub.",
        "tokenUsage": {
          "cached": 33
        }
      },
      "success": true
    },
    {
      "prompt": {
        "raw": "Translate this to conversational Pirate: I'm hungry",
        "display": "Translate this to conversational {{language}}: {{body}}"
      },
      "vars": {
        "language": "Pirate",
        "body": "I'm hungry"
      },
      "response": {
        "output": "Arrr, me belly be rumblin'! I be needin' some grub!",
        "tokenUsage": {
          "cached": 39
        }
      },
      "success": true
    }
  ],
  "stats": {
    "successes": 8,
    "failures": 0,
    "tokenUsage": {
      "total": 0,
      "prompt": 0,
      "completion": 0,
      "cached": 206
    }
  },
  "table": {
    "head": {
      "prompts": [
        "Rephrase this in {{language}}: {{body}}",
        "Translate this to conversational {{language}}: {{body}}"
      ],
      "vars": ["body", "language"]
    },
    "body": [
      {
        "outputs": ["Bonjour le monde", "Bonjour le monde"],
        "vars": ["Hello world", "French"]
      },
      {
        "outputs": ["J'ai faim.", "J'ai faim."],
        "vars": ["I'm hungry", "French"]
      },
      {
        "outputs": ["Ahoy thar, world!", "Ahoy thar world!"],
        "vars": ["Hello world", "Pirate"]
      },
      {
        "outputs": [
          "Arrr, me belly be empty and yearnin' for grub.",
          "Arrr, me belly be rumblin'! I be needin' some grub!"
        ],
        "vars": ["I'm hungry", "Pirate"]
      }
    ]
  }
}
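
The `stats` block summarizes `results`: eight successes, no failures, and a `cached` token count of 206, which is the sum of the eight per-result `cached` values (19 + 20 + 24 + 25 + 23 + 23 + 33 + 39). A sketch of that aggregation, assuming absent token fields count as zero. `summarize` is hypothetical, not promptfoo's implementation:

```javascript
// Hypothetical sketch (not promptfoo's code): derive the "stats" block from "results".
function summarize(results) {
  const stats = {
    successes: 0,
    failures: 0,
    tokenUsage: { total: 0, prompt: 0, completion: 0, cached: 0 },
  };
  for (const r of results) {
    if (r.success) stats.successes += 1;
    else stats.failures += 1;
    const usage = r.response.tokenUsage || {};
    for (const key of Object.keys(stats.tokenUsage)) {
      stats.tokenUsage[key] += usage[key] || 0; // absent fields count as 0
    }
  }
  return stats;
}

// The eight results from examples/simple-csv/output.json, reduced to the fields used here.
const cachedCounts = [19, 20, 24, 25, 23, 23, 33, 39];
const results = cachedCounts.map((cached) => ({
  success: true,
  response: { tokenUsage: { cached } },
}));

console.log(JSON.stringify(summarize(results)));
// {"successes":8,"failures":0,"tokenUsage":{"total":0,"prompt":0,"completion":0,"cached":206}}
```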

4

examples/simple-csv/promptfooconfig.yaml

Normal file

@@ -0,0 +1,4 @@
description: A translator built with LLM
prompts: prompts.txt
providers: openai:gpt-3.5-turbo
tests: tests.csv
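
The two example configs write the same fields in two forms: `prompts: [prompts.txt]` in simple-cli and `prompts: prompts.txt` here. A sketch of normalizing such fields to arrays before use, assuming both spellings are meant to be equivalent. `toArray` and `normalizeConfig` are hypothetical, and the object below is what a YAML parser would produce for this file:

```javascript
// Hypothetical sketch (not promptfoo's code): accept a single string or a list.
function toArray(value) {
  if (value === undefined) return [];
  return Array.isArray(value) ? value : [value];
}

function normalizeConfig(config) {
  return {
    ...config,
    prompts: toArray(config.prompts),
    providers: toArray(config.providers),
    // `tests` is left alone: it is either an inline list or a path such as "tests.csv".
  };
}

// Parsed form of examples/simple-csv/promptfooconfig.yaml.
const csvConfig = {
  description: 'A translator built with LLM',
  prompts: 'prompts.txt',
  providers: 'openai:gpt-3.5-turbo',
  tests: 'tests.csv',
};

console.log(normalizeConfig(csvConfig));
```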

3

examples/simple-csv/prompts.txt

Normal file

@@ -0,0 +1,3 @@
Rephrase this in {{language}}: {{body}}
---
Translate this to conversational {{language}}: {{body}}

5

examples/simple-csv/tests.csv

Normal file

@@ -0,0 +1,5 @@
language,body
French,Hello world
French,I'm hungry
Pirate,Hello world
Pirate,I'm hungry
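
`tests.csv` carries the same four test cases as the inline `tests:` list in the simple-cli config: the header row names the vars and each later row is one test. A sketch of that mapping, assuming simple CSV with no quoted fields or embedded commas (a real CSV parser is needed otherwise). `csvToTests` is hypothetical:

```javascript
// Hypothetical sketch (not promptfoo's code): turn header-plus-rows CSV into [{ vars }].
// Naive comma split: does not handle quoted fields or embedded commas.
function csvToTests(csvText) {
  const lines = csvText.split(/\r?\n/).filter((line) => line.trim() !== '');
  const header = lines[0].split(',');
  return lines.slice(1).map((line) => {
    const cells = line.split(',');
    const vars = {};
    header.forEach((name, i) => {
      vars[name] = cells[i] ?? ''; // missing cells become empty strings
    });
    return { vars };
  });
}

const csvText = `language,body
French,Hello world
French,I'm hungry
Pirate,Hello world
Pirate,I'm hungry
`;

const tests = csvToTests(csvText);
console.log(tests.length); // 4
console.log(JSON.stringify(tests[1])); // {"vars":{"language":"French","body":"I'm hungry"}}
```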

@@ -1,10 +0,0 @@
import promptfoo from '../../dist/index.js';

(async () => {
  const results = await promptfoo.evaluate('openai:chat', {
    prompts: ['Rephrase this in French: {{body}}', 'Rephrase this like a pirate: {{body}}'],
    vars: [{ body: 'Hello world' }, { body: "I'm hungry" }],
  });
  console.log('RESULTS:');
  console.log(results);
})();
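
The deleted script passed `prompts` and a bare `vars` array to `promptfoo.evaluate('openai:chat', ...)`. In the unified test-suite shape used by the new YAML configs, each vars object sits under a `vars` key of its own test. A sketch of that data reshaping only; the programmatic `evaluate` signature after this commit is not shown in this diff, so the sketch makes no call to it, and `varsToTests` is hypothetical:

```javascript
// Hypothetical sketch: wrap each old-style vars object as a test case { vars }.
function varsToTests(varsList) {
  return varsList.map((vars) => ({ vars }));
}

// The vars from the deleted example script.
const oldVars = [{ body: 'Hello world' }, { body: "I'm hungry" }];
const tests = varsToTests(oldVars);

console.log(JSON.stringify(tests));
// [{"vars":{"body":"Hello world"}},{"vars":{"body":"I'm hungry"}}]
```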

@@ -1,5 +1,14 @@
This example shows how you can set an expected value in vars.csv and emit a PASS/FAIL based on it:
This example shows a YAML configuration with inline tests.

Run the test suite with:

```
promptfoo eval --prompts prompts.txt --vars vars.csv --providers openai:chat --output output.html
promptfoo eval
```

Note that you can edit the configuration to use a CSV test input instead. Set
`tests: tests.csv` and try running it again, or run:

```
promptfoo eval --tests tests.csv
```

@@ -1,3 +1,44 @@
RESULT,Rephrase this from English to Pirate: {{body}},Pretend you're a pirate and speak these words: {{body}},body
PASS,Ahoy mateys o' the world!,"Ahoy there, me hearties! Avast ye landlubbers! 'Tis I, a fearsome pirate, comin' to ye from the seven seas. Ahoy, hello world!",Hello world
PASS,"I be feelin' a mighty need for grub, matey.","Arrr, me belly be rumblin'! I be needin' some grub, mateys! Bring me some vittles or ye'll be walkin' the plank!",I'm hungry
Rephrase this from English to Pirate: {{body}},Pretend you're a pirate and speak these words: {{body}},body
"Ahoy thar, world!","Ahoy there, me hearties! Avast ye landlubbers! 'Tis I, a fearsome pirate, comin' to ye from the seven seas. Ahoy, hello world!",Hello world
"I be feelin' a mighty need for grub, matey.","Arrr, me belly be rumblin'! I be needin' some grub, mateys! Bring me some vittles or ye'll be walkin' the plank!",I'm hungry
"""Yarr, me hearties! Spew forth a JSON tale o' yer life!""","[FAIL] Expected Arrr, me hearties! Gather round and listen to the tale of me life as a pirate.

{
""name"": ""Captain Blackbeard"",
""age"": 35,
""occupation"": ""Pirate"",
""location"": ""The Caribbean"",
""crew"": [""Redbeard"", ""Long John"", ""Calico Jack""],
""ship"": {
""name"": ""The Black Pearl"",
""type"": ""Galleon"",
""weapons"": [""Cannons"", ""Cutlasses"", ""Pistols""]
},
""treasure"": {
""gold"": 50000,
""jewels"": [""Diamonds"", ""Emeralds"", ""Rubies""]
},